Why managing container images on OpenShift is better than on Kubernetes

So you’ve decided to go with Kubernetes and started building your container images. Now the question is where to push them and how to manage them properly. It’s something you don’t see in those “always working” demos and slides, but you will have to deal with it sooner or later, and it can be a real challenge. After all, both Kubernetes and OpenShift are built around the concept of container images that are instantiated as many containers with different configurations and settings.

Kubernetes’ transparent approach to container images

Let’s start with Kubernetes and its way of dealing with container images. It follows the simple Unix philosophy - each component does one thing and does it well. Putting them together (e.g. pod templates in a Deployment, with access exposed by a Service that selects pods by labels) is what makes it so powerful.

This simplicity has some drawbacks though. Containers are created from container images, which are referenced in different resources. For example, here is a sample Pod definition for a single container based on the foo:1.0 image.

    apiVersion: v1
    kind: Pod
    metadata:
      name: foo
    spec:
      containers:
      - image: foo:1.0
        name: foo

One thing is clear here - this image has to exist already, and Kubernetes needs to have access to the registry that hosts it. So the big question is: why can’t we create images in the same way as we create pods, replicasets and other resources? The answer is simple - there is no resource in Kubernetes responsible for building a container image (yet?). That’s the missing piece in my opinion, and it’s a bit confusing. So how do most people manage to build container images on Kubernetes? In the easiest, but definitely not the most secure or flexible way - just plain Docker with some tricks.

“Good”, old docker

There are a couple of choices you have when you want to build container images for Kubernetes. In general there are two ways:

- Build images outside Kubernetes using external tools, scripts, and other magic

- Build images inside Kubernetes using some combination of its resources

In almost all scenarios you will end up with our old friend - the docker build command. Although there are other options (e.g. buildah), I suppose people will continue to use it for a long time.

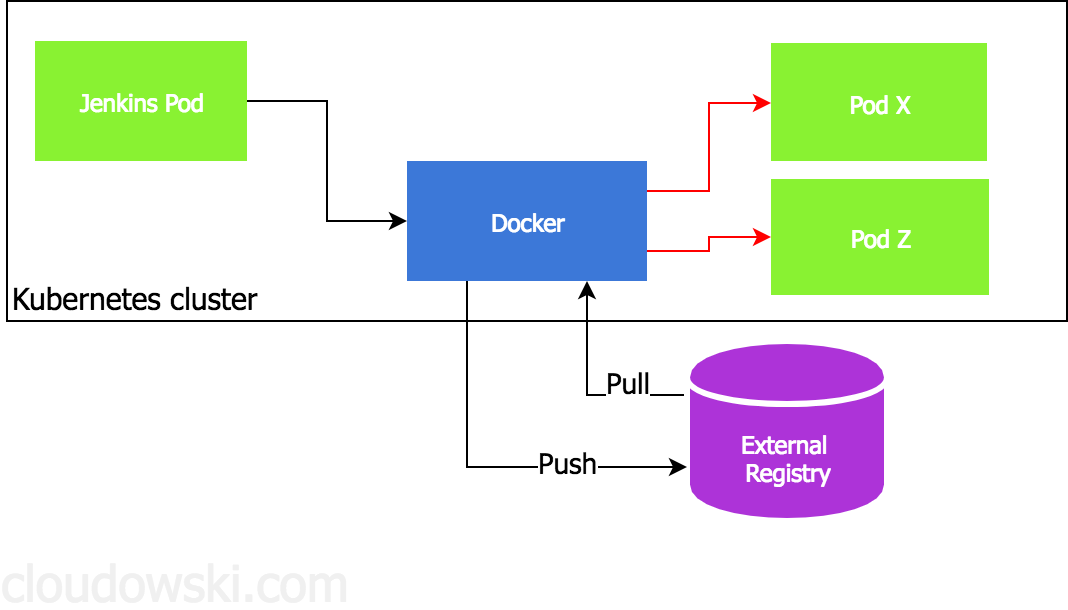

One major issue with that approach (especially when implemented inside Kubernetes) is that you need to expose the Docker socket (or a TCP connection to the Docker daemon) directly to the build agent (e.g. Jenkins), which is a huge security risk - the agent will be able to do anything with other containers running on the same host. Yes, I know there are many ways to make it more secure, but most people do it that way. Sad, but true (and insecure).
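To make the risk concrete, here is a rough sketch of what such a build agent pod typically looks like. The pod name and the image are hypothetical placeholders; the important part is the hostPath volume that hands the host's Docker socket to the container.

```yaml
# Illustrative only: a build agent with the host's Docker socket mounted.
# "jenkins-agent" and the image name are hypothetical placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-agent
spec:
  containers:
  - name: agent
    image: example/jenkins-agent:latest   # hypothetical image
    volumeMounts:
    - name: docker-sock
      mountPath: /var/run/docker.sock     # docker CLI inside the pod talks to the host daemon
  volumes:
  - name: docker-sock
    hostPath:
      path: /var/run/docker.sock          # the host's Docker daemon socket
```

Anything that can write to that socket can start, stop or inspect every container on the node, which is exactly the problem described above.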

OpenShift with Kubernetes approach

If you’re new to OpenShift or you’ve come from the Kubernetes world, you might be tempted to follow the same approach. Fortunately, the default restricted policy (security context constraint) assigned to all new pods will prevent you from launching your Jenkins container with the host’s Docker socket mounted inside. You may try to change it or work around it somehow, but a better approach is to just sit down and ask yourself: do I really want to expose my containers to danger just to build a container image? The only right answer is NO. Now it’s time to use something better and more secure.

Building and managing images with BuildConfig and ImageStream

Welcome to a better world with declarative resources for building container images (BuildConfig) and an abstraction layer for them (ImageStream). Let’s start with BuildConfig. It’s a resource that defines how to build a container image and where to push it. Here’s an example definition:

    apiVersion: v1
    kind: BuildConfig
    metadata:
      name: foo-build
    spec:
      source:
        git:
          uri: "git@git.example.com:foo.git"
        type: Git
      strategy:
        dockerStrategy:
          noCache: true
      output:
        to:
          kind: ImageStreamTag
          name: foo:latest

That’s it - now you just need to create that object like you would with any similar YAML definition (e.g. oc apply -f foo-buildconfig.yaml). This will create a new foo-build object that, when started, will do the following things:

- fetch files from the git repository

- build a container image based on the Dockerfile from that repo (in this example it would have to be in the root directory)

- push the built foo:latest image to an ImageStreamTag object (a single entry of an ImageStream pointing to one image) in the current namespace

It is possible to push it directly to a docker registry, but it would be such a waste of the features that ImageStream provides. So where does this image go? That’s the point - it’s not important from your perspective. It’s an abstraction layer and you shouldn’t really care what happens with it (by default it will be pushed to the OpenShift internal registry). What you should care about is how it may help you, and believe me - it has many interesting features.

It’s worth mentioning that the BuildConfig object is really powerful and lets you build container images quite easily, for the following reasons:

- It can use Source-To-Image to build an application without any Dockerfile involved; when you create the build with oc new-app, it detects the technology/framework and chooses an appropriate builder image for you

- It can use Dockerfiles (as in the example above), but it launches a dedicated pod that controls the whole process - you don’t give access to all containers running on the host like you would with Jenkins+Docker on Kubernetes

- It can download code from a private repo, using Secret objects for authentication

- It can push the built image directly into a private container image registry, also using authentication stored in a Secret

- It can trigger other builds to create a chain of dependent builds - a perfect solution when you have a base image and other dependent ones that need to be rebuilt automatically
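As a rough sketch of the first and third points, a Source-To-Image build from a private repo could look more or less like this. The names foo-s2i and foo-git-key and the python:latest builder are my assumptions for illustration, and I'm using the grouped build.openshift.io/v1 apiVersion that newer OpenShift releases expect.

```yaml
# A minimal sketch, not a definitive definition.
# Assumptions: "foo-s2i", "foo-git-key" and the python builder are illustrative names.
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: foo-s2i
spec:
  source:
    type: Git
    git:
      uri: "git@git.example.com:foo.git"
    sourceSecret:
      name: foo-git-key            # Secret holding the SSH key for the private repo
  strategy:
    type: Source                   # Source-To-Image, no Dockerfile needed
    sourceStrategy:
      from:
        kind: ImageStreamTag
        name: python:latest        # builder image, assumed to exist in the openshift namespace
        namespace: openshift
  output:
    to:
      kind: ImageStreamTag
      name: foo:latest
```

To push to an external private registry instead, output.to would be of kind DockerImage and the credentials would be referenced with output.pushSecret.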

ImageStream as a very useful abstraction layer

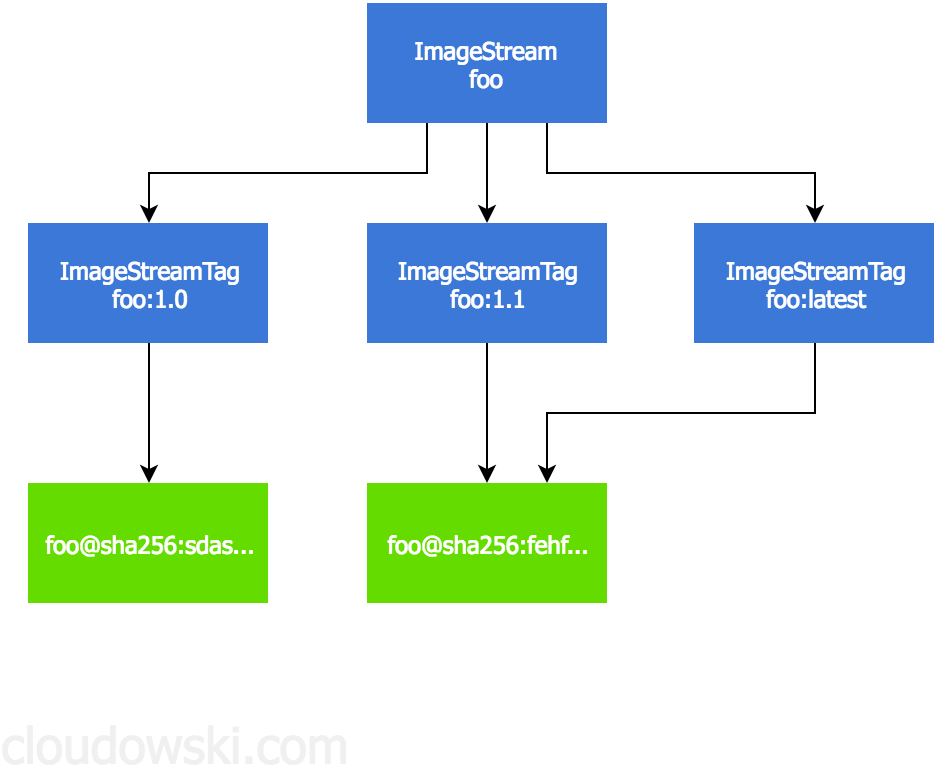

OpenShift introduced the concept of ImageStream. So what is it? The simplest definition would be a collection of references to real container images. You can also imagine that an ImageStream is like a directory (from the Unix world) with symlinks (ImageStreamTags) in it. These symlinks point to actual container images. Just like a directory and symlinks, they do not contain real data. ImageStreamTags store only container image metadata.

Importing images to ImageStream

ImageStreamTag objects are references to individual container images kept in internal or external registries. Each image is identified by its immutable sha256 digest, because a tag can always be moved to another image, but a sha256 checksum can’t change.

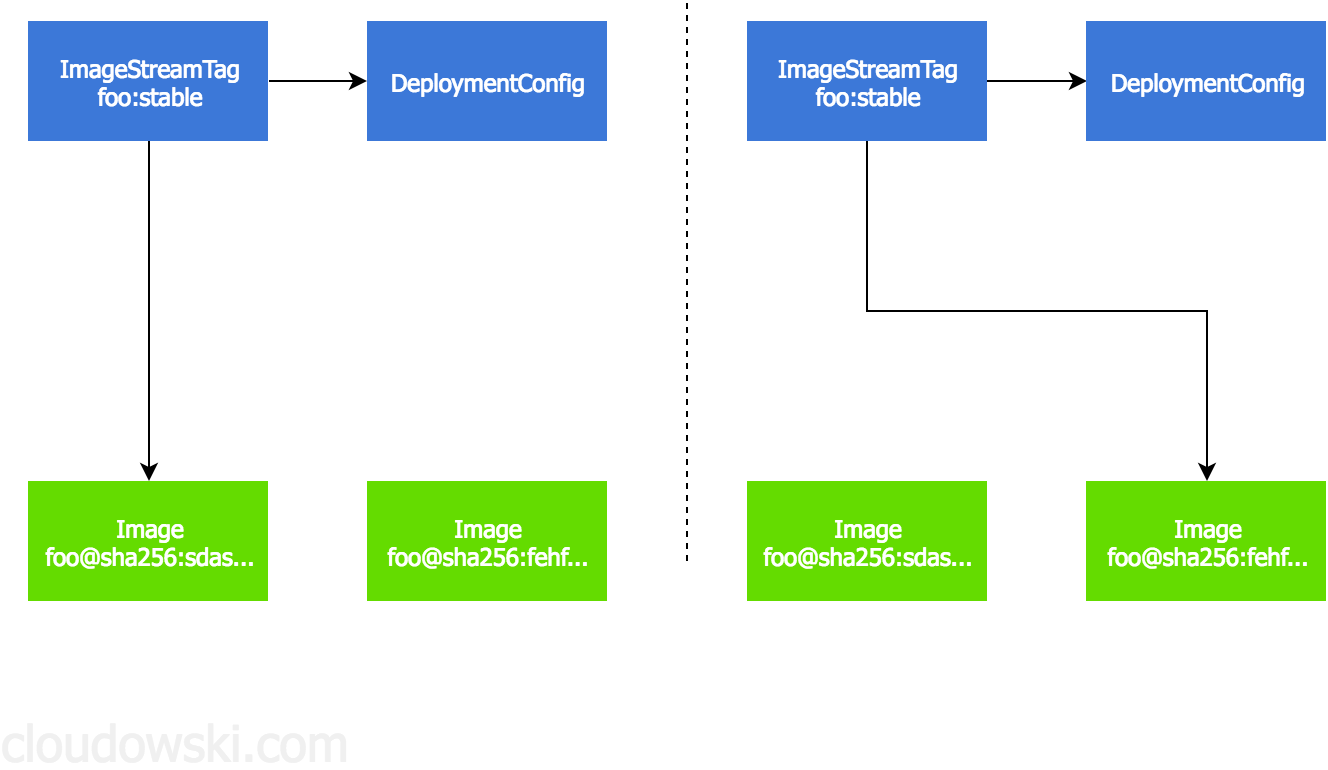

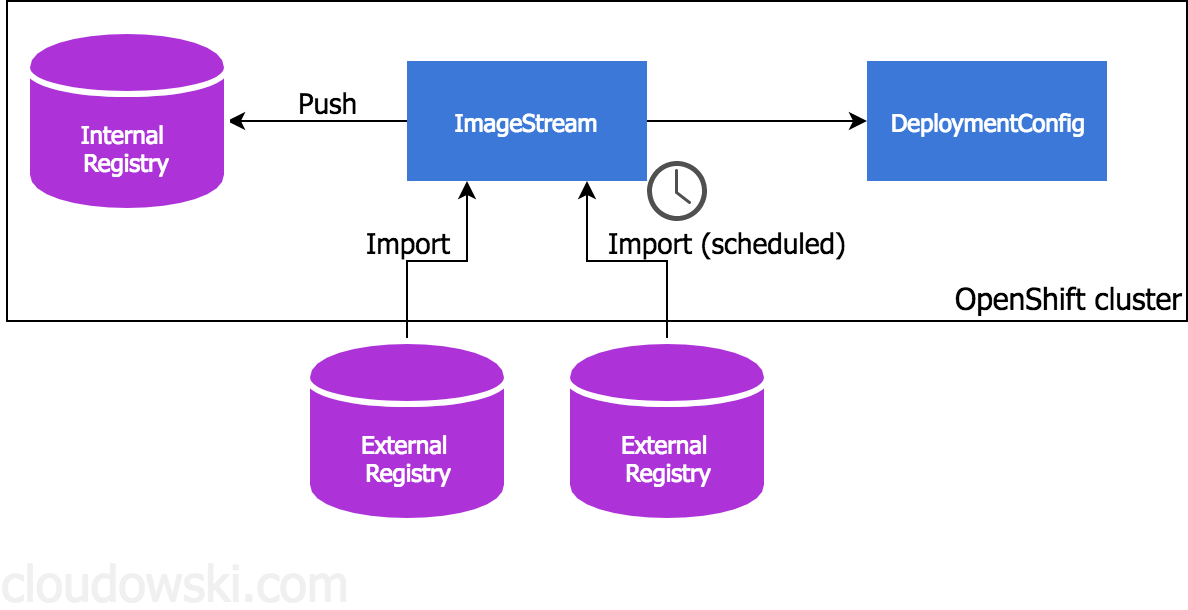

You can import those external images and reference them in a DeploymentConfig resource definition rather than specifying their origin directly. External images can also be imported into the internal registry to speed up deployments - otherwise, they will be downloaded directly from the remote registry. This import process can be done automatically by OpenShift if configured properly.
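For illustration, an ImageStream that tracks an external image with a scheduled import might be defined roughly like this. The nginx name and the Docker Hub reference are just example values; the referencePolicy setting is my assumption of how you'd ask OpenShift to serve the image through the internal registry.

```yaml
# A minimal sketch, assuming an ImageStream called "nginx" that follows Docker Hub.
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: nginx
spec:
  tags:
  - name: latest
    from:
      kind: DockerImage
      name: docker.io/library/nginx:latest   # the external image this tag points to
    importPolicy:
      scheduled: true      # re-import periodically to pick up new versions
    referencePolicy:
      type: Local          # pull through the internal registry rather than the remote one
```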

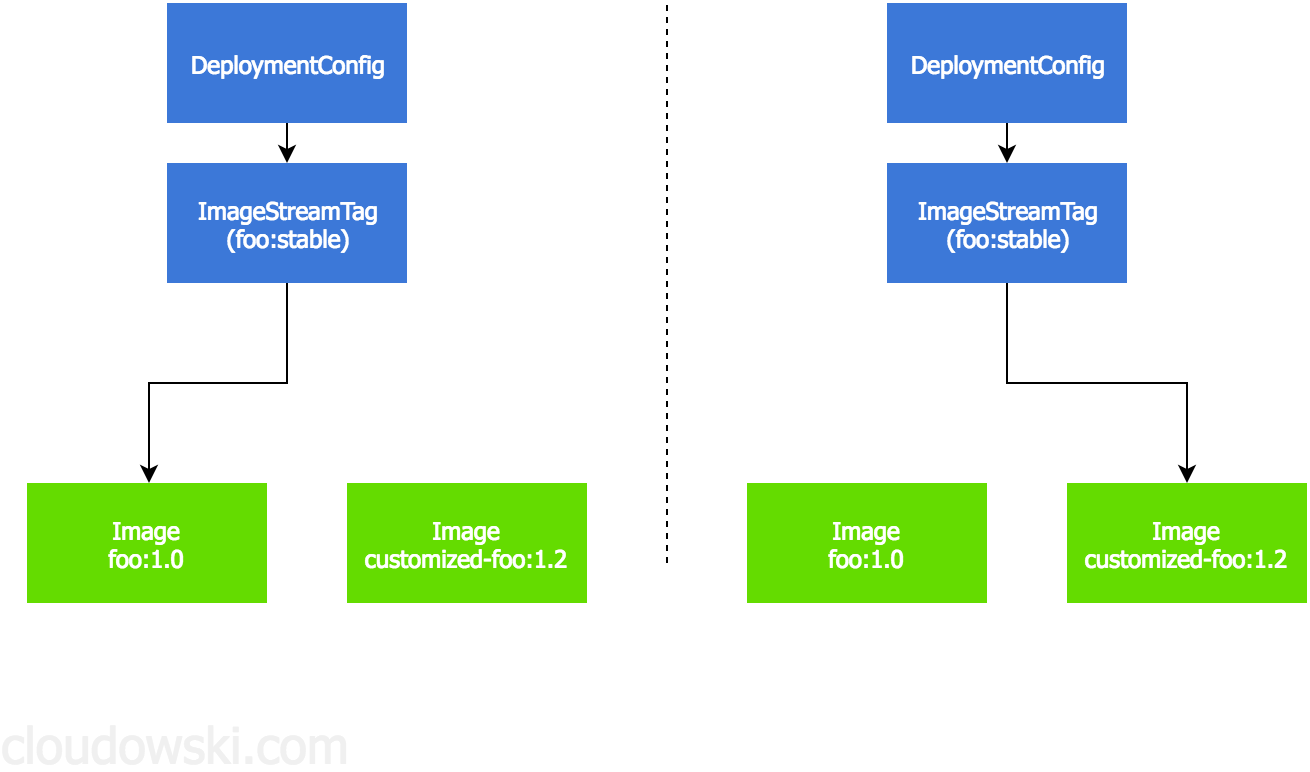

Hiding and replacing container images

An interesting feature of ImageStream is that it hides the real image behind it. For example, at first you decide to use the official nginx image (provided, of course, that you don’t like the one shipped with OpenShift and you adjust your scc policy) as the nginx ImageStream. All your apps will use it for static content in their deployment definitions. Later, when you decide to change something that is specific to your organization, you build a new image and replace the reference in the ImageStream. From then on, each new app will use it in a totally transparent way without changing anything!

Magic with triggers

Now that’s where things get really interesting. In OpenShift you can attach triggers to some objects (i.e. BuildConfig, DeploymentConfig) that fire on certain events. That’s what makes it so powerful and fully automated. Let’s have a look at two real-life examples.

Keeping your deployment always up-to-date

So you have your nginx, rabbitmq or any other image you use with your own configuration. How about keeping it up-to-date without any manual action? Not sure about you, but for an automation freak like me it sounds perfect. All you need to do is add the following section to your DeploymentConfig definition:

    triggers:
    - type: ImageChange
      imageChangeParams:
        from:
          kind: ImageStreamTag
          name: 'foo:latest'
          namespace: openshift

Then you need to make sure your foo ImageStream is set up for scheduled imports - you can do that with the CLI:

    oc import-image foo --scheduled

and it’s ready!

Now when a new image is discovered, it will trigger a new deployment using that new image.
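To show where that trigger section lives, here is a sketch of a complete DeploymentConfig. Everything outside the trigger (labels, replica count, container name) is my assumption for illustration. As far as I know, the trigger also needs automatic: true to roll out without manual action, and containerNames to tell it which container's image to update.

```yaml
# A minimal sketch; names and labels other than foo:latest are illustrative assumptions.
apiVersion: apps.openshift.io/v1
kind: DeploymentConfig
metadata:
  name: foo
spec:
  replicas: 1
  selector:
    app: foo
  template:
    metadata:
      labels:
        app: foo
    spec:
      containers:
      - name: foo
        image: foo:latest          # replaced by the trigger with the resolved image reference
  triggers:
  - type: ConfigChange             # redeploy when the pod template changes
  - type: ImageChange              # redeploy when the ImageStreamTag points to a new image
    imageChangeParams:
      automatic: true
      containerNames:
      - foo                        # must match the container name in the template
      from:
        kind: ImageStreamTag
        name: 'foo:latest'
        namespace: openshift
```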

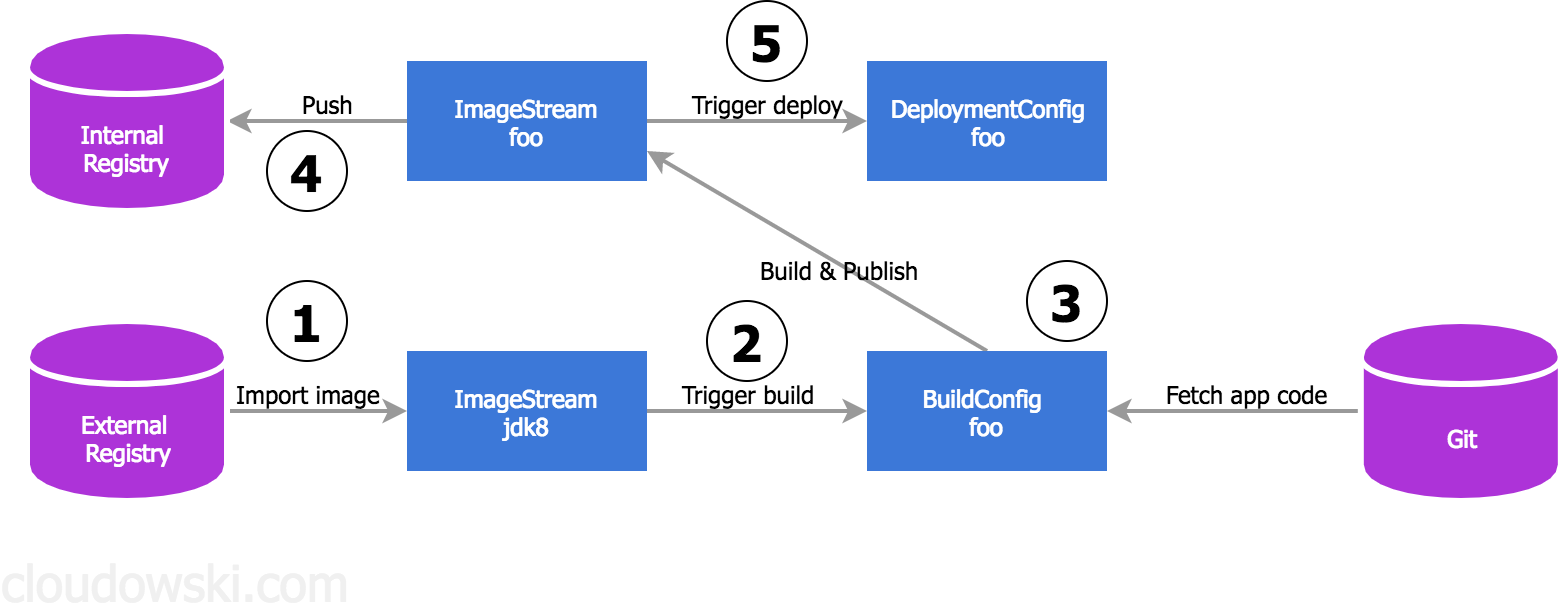

All the best features of ImageStream in an example, real-world scenario

Imagine a scenario where you want to upgrade all images of your app not because the code has changed, but because the base image it’s built on top of has been updated, e.g. an important security bug has been fixed.

So what we have here is a fully automated workflow that consists of the following steps:

- A new container image is imported from the external registry (preferably as a scheduled import set on the jdk8 ImageStream)

- ImageStream jdk8 triggers a new build of an app defined in foo BuildConfig

- Build fetches app code from git repo, builds a new container image and publishes it in app’s foo ImageStream

- New container image is pushed into the internal registry and ImageStreamTag reference changes and points to that new image

- This action triggers a new deployment on a foo DeploymentConfig object
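The build part of that chain could be wired up roughly as follows. This is a sketch under my own assumptions (a jdk8:latest tag in the same namespace, a Dockerfile in the repo root), not the exact definition behind the steps above.

```yaml
# A minimal sketch of the foo BuildConfig that rebuilds when jdk8 changes.
# Assumption: the jdk8 ImageStream lives in the same namespace and has a "latest" tag.
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: foo
spec:
  source:
    type: Git
    git:
      uri: "git@git.example.com:foo.git"
  strategy:
    type: Docker
    dockerStrategy:
      from:
        kind: ImageStreamTag
        name: jdk8:latest          # overrides the FROM line of the Dockerfile
  output:
    to:
      kind: ImageStreamTag
      name: foo:latest             # the DeploymentConfig trigger watches this tag
  triggers:
  - type: ImageChange
    imageChange: {}                # empty: watch the image referenced in strategy.from
```

With this in place, a new jdk8 import starts a build, the build updates foo:latest, and the ImageChange trigger on the DeploymentConfig does the rest.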

Within a couple of minutes, you have your app running on an updated version of the image without touching anything! And that’s my favorite feature of OpenShift ImageStream. I wish I had something similar in Kubernetes, as playing with Docker directly is so not “cloud native” :-)
