Security is a major factor when deciding whether to invest your precious time and resources in a new technology. It’s no different for containers and Kubernetes. I’ve heard a lot of concerns about it, so I decided to write about the factors that have the biggest impact on the security of container-based systems running on Kubernetes. This is particularly important, as security is often the only impediment blocking the adoption of a container-based environment, and with it the chance to speed up innovation. That’s why I decided to help all of you who want to strengthen the security of your container images.

Image size (and content) matters

The first factor is the size of a container image. It’s also one of the reasons containers gained so much popularity: whole solutions, often quite complex ones, now consist of multiple containers running from publicly available images (mostly from Docker Hub) that you can start in a few minutes. If your application running inside a container gets compromised and the attacker gets shell access to the environment, he needs his own tools, and downloading them onto the host is often the first thing he does. What if he can’t access the tools that would let him do that? No scp, curl, wget, python or whatever else he could use. That would make it harder, or even impossible, to harm your systems. That is exactly why you need to keep your container images small and include only the libraries and binaries that are really essential and used by the process running in the container.

Recommended practices

Use slim versions for base images

Since you want to keep your images small, choose “fit” versions of base images. They are often called slim images and include fewer utilities. For example, the most popular base image is Debian: its standard version, debian:jessie, weighs 50MB, while debian:jessie-slim weighs only 30MB. Besides less exciting documentation files (list available here), it’s missing the following binaries:

- /bin/ip
- /bin/ping
- /bin/ping6
- /bin/ss
- /sbin/bridge
- /sbin/rtacct
- /sbin/rtmon
- /sbin/tc
- /usr/bin/lnstat
- /usr/bin/nstat
- /usr/bin/routef
- /usr/bin/routel
- /usr/sbin/arpd

They are mostly useful for network troubleshooting, and I doubt that ping is a deadly weapon. Still, the fewer binaries are present, the fewer files an attacker can target with, for example, a zero-day exploit.

Unfortunately, neither the official Ubuntu nor the CentOS images have slim versions. Debian looks like a much better choice: it may contain too many files, but that’s very easy to fix, as described below.

Delete unnecessary files

Do you need to install any packages from a running container? If you answered yes, then you’re probably doing it wrong. Container images follow the simple Unix philosophy: they should do one thing (and do it well), which is run a single app or service. Container images are immutable, so any software should be installed during the image build. Afterward, you don’t need the package manager anymore, and you can disable it by deleting its binaries.

For Debian, just put the following near the end of your Dockerfile:

```dockerfile
RUN rm -f /usr/bin/apt /usr/bin/apt-* /usr/bin/dpkg*
```

Did you know that even without those binaries, an attacker can still perform actions through system calls? Any language interpreter, such as Python, Ruby or Perl, lets him do that. It turns out that even the slim version of Debian contains Perl! Unless your app is written in Perl, you can and should delete it:

```dockerfile
RUN rm /usr/bin/perl*
```

For other base images, you may want to delete the following file categories:

- Package management tools: yum, rpm, dpkg, apt
- Any language interpreter that is unused by your app: ruby, perl, python, etc.
- Utilities that can be used to download remote content: wget, curl, http, scp, rsync
- Network connectivity tools: ssh, telnet, rsh
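Putting these recommendations together, a Debian-based application image might look like the sketch below. It is only an illustration under a few assumptions: `debian:bookworm-slim` stands in for the `jessie` images mentioned above (same slim variant, newer release), `ca-certificates` is a placeholder for whatever your app really needs, and `myapp` is a hypothetical binary built elsewhere and placed in the build context.

```dockerfile
FROM debian:bookworm-slim

# Install everything the app needs while the package manager is still available.
# ca-certificates is only a placeholder dependency.
RUN apt-get update \
    && apt-get install -y --no-install-recommends ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# Hypothetical application binary, built outside this Dockerfile.
COPY myapp /usr/local/bin/myapp

# Remove package management tools, unused interpreters, download and remote-access tools.
# rm -f skips paths that do not exist in this base image, so the list can be generous.
RUN rm -f /usr/bin/apt /usr/bin/apt-* /usr/bin/dpkg* \
          /usr/bin/perl* /usr/bin/python* /usr/bin/ruby* \
          /usr/bin/wget /usr/bin/curl /usr/bin/scp /usr/bin/rsync \
          /usr/bin/ssh /usr/bin/telnet /usr/bin/rsh

ENTRYPOINT ["/usr/local/bin/myapp"]
```

Keep the removal step last: once apt and dpkg are gone, later RUN instructions can no longer install packages. Also note that files deleted in a later layer are hidden from the running container but still stored in the earlier layers, so this step reduces what an attacker can use rather than the image size.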

Separate building from embedding

For languages that need compiling (e.g. Java, Go), it’s better to decouple building the application artifact from building the container image. If you use the same image for building and running your container, it will be very large: development tools have many dependencies, which not only enlarge your final image but also bring in binaries that could be used by an attacker.

If you’re migrating your app to a container environment, you probably already have some kind of CI/CD process, or at least you build your artifacts on Jenkins or another server. You can leverage this existing process and add a step that copies the artifact into the app image.
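When the artifact comes from an existing CI server, the image build can shrink to copying it onto a runtime-only base. A minimal sketch, assuming a Java app whose CI job leaves a hypothetical `target/myapp.jar` in the build context, with `eclipse-temurin:17-jre` used as an example of a JRE-only base image:

```dockerfile
# Runtime-only base image: a JRE, no JDK, no Maven or other build tools.
FROM eclipse-temurin:17-jre

# The jar was already built by the CI server (e.g. a Jenkins job); just copy it in.
COPY target/myapp.jar /app/myapp.jar

ENTRYPOINT ["java", "-jar", "/app/myapp.jar"]
```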

For a fully container-based build, you can use the multi-stage build feature. Note, however, that it works only with newer versions of Docker (17.05 and above) and doesn’t work with OpenShift. On OpenShift there is a similar feature called chained builds, but it’s more complicated to use.
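With a multi-stage build, the same separation happens inside a single Dockerfile. The sketch below is a hedged example for a Maven project; the image tags are illustrative, and it assumes the build produces a self-contained executable jar named `target/myapp.jar` (e.g. via `<finalName>myapp</finalName>` in `pom.xml`), which is not taken from any real project.

```dockerfile
# Stage 1: build with the full toolchain (JDK + Maven).
FROM maven:3.9-eclipse-temurin-17 AS build
WORKDIR /src
COPY pom.xml .
COPY src ./src
# Assumes the pom produces a self-contained jar with a predictable name.
RUN mvn -B -DskipTests package

# Stage 2: runtime image. Only the jar is copied; Maven, the JDK and the
# local Maven repository stay behind in the discarded build stage.
FROM eclipse-temurin:17-jre
COPY --from=build /src/target/myapp.jar /app/myapp.jar
ENTRYPOINT ["java", "-jar", "/app/myapp.jar"]
```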

Consider the development of statically compiled apps

Want to have the most secure container image? Put a single file in it. That’s it. Currently, the easiest way to accomplish that is to develop the app in Go. Easier said than done, but consider it for your future projects and prefer vendors that are able to deliver software written in Go. As long as it doesn’t compromise quality and other factors (e.g. delivery time, cost), it definitely increases security.
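As a hedged illustration of a single-file image, a Go program built with cgo disabled can run from the empty `scratch` image. The layout (a `go.mod` with no external dependencies, so there is no `go.sum` to copy, plus `.go` files in the build context) and the `golang:1.22` tag are assumptions; any recent Go toolchain image works the same way.

```dockerfile
# Build stage: compile a statically linked binary.
FROM golang:1.22 AS build
WORKDIR /src
COPY go.mod ./
COPY *.go ./
# CGO_ENABLED=0 produces a binary that does not depend on the C library.
RUN CGO_ENABLED=0 go build -o /out/app .

# Final image: nothing but the binary. No shell, no package manager, no interpreters.
FROM scratch
COPY --from=build /out/app /app
ENTRYPOINT ["/app"]
```

If the app makes outbound TLS connections, it also needs CA certificates copied into the image, so in practice the image may hold a few files rather than exactly one.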

Maintaining secure images

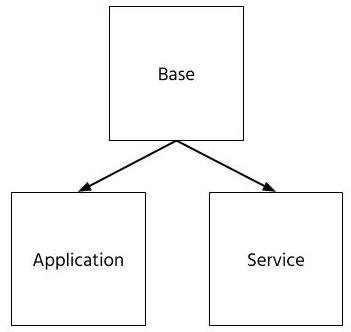

There are three types of container images you use:

- Base images

- Services (a.k.a. COTS, commercial off-the-shelf)

- Your applications

Base images aren’t used to run containers; they provide the first layer with all the necessary files and utilities. For services, you don’t need to build anything: you just run them and provide your configuration and a place to store data. Application images are built by you and thus require a base image on top of which you build your final image.

Of course, both services and applications are built on top of a base image, so their security largely depends on its quality. The following factors have the biggest impact on container image security:

- The time it takes to publish a new image with fixes for vulnerabilities found in its packages (binaries, libraries)

- Existence of a dedicated security team that tracks vulnerabilities and publishes advisories

- Support for automatic scanning tools, which often need access to a security database with published fixes

- A proven track record of handling major vulnerabilities in the past

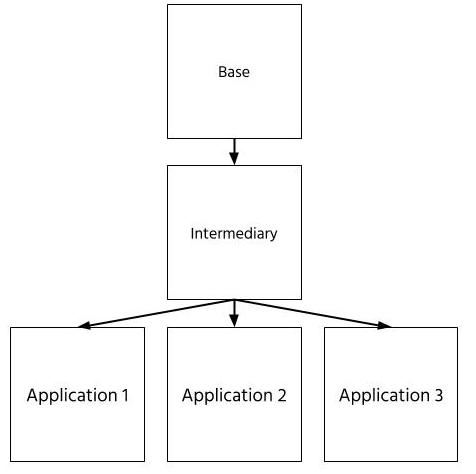

When an organization builds many applications, it usually builds and maintains an intermediary image that serves as the base image for all of them. It is used primarily to provide common settings and additional files (e.g. the company’s certificate authority) or to tighten security. In other words, it standardizes and enforces security in all applications used throughout the organization.
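A company-wide intermediary image can be as simple as the sketch below. It assumes a hypothetical root certificate file `company-root-ca.crt` in the build context; Debian’s `update-ca-certificates` picks up `.crt` files placed in `/usr/local/share/ca-certificates`. The package manager is intentionally kept here, because application images built on top may still need it; they remove it in their own final step.

```dockerfile
# Intermediary image maintained centrally; application images use it in their FROM line.
FROM debian:bookworm-slim

# Trust the company's certificate authority in every application image.
COPY company-root-ca.crt /usr/local/share/ca-certificates/company-root-ca.crt
RUN apt-get update \
    && apt-get install -y --no-install-recommends ca-certificates \
    && update-ca-certificates \
    && rm -rf /var/lib/apt/lists/*
```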

Now we know how important it is to choose a secure base image. For service images, however, we rely on the choice made by a vendor or an image creator. Sometimes they publish multiple editions of an image: in most cases one is based on Debian or Ubuntu, and an additional one on Alpine (e.g. Redis, RabbitMQ, Postgres).

Doubts around Alpine

And what about Alpine? Personally, I’m not convinced it’s a good idea to use it in production. There are many unexpected and strange behaviors, and I just don’t find it good enough for a reliable production environment. The lack of security advisories and a single repository maintained by a single person are not the best recommendation. I know that many people trust this tiny Linux distribution that is supposed to be “Small. Simple. Secure.” I use it for many demos, trainings and tests, but it still hasn’t convinced me that it is as good as good old Debian, Ubuntu, CentOS or maybe even Oracle Linux. And just recently a major vulnerability was discovered: most of their images had an empty password set for the root user. Check whether your images use the affected version and fix it ASAP.

Recommended practices

Trust but verify

As I mentioned before, official images sometimes don’t offer the best quality, which is why it’s better to verify them as part of your CI/CD pipeline. Here are some tools you should consider:

- Anchore (open source and commercial)
- Clair (open source)
- OpenSCAP (open source)
- Twistlock (commercial)
- JFrog Xray (commercial)
- Aquasec (commercial)

Use them wisely, and use them to check your currently running containers too: critical vulnerabilities are found often, and waiting for the next deployment can pose a serious security risk.

Use vendor provided images

For service images, it is recommended to use images provided by a vendor. They are available as so-called “official images”. The main advantage is that they are updated not only when a vulnerability is found in the software itself but also when one is found in the underlying OS layer. Think twice before choosing a non-official image or building one yourself; it’s just a waste of your time. If you really need to customize beyond providing configuration, you have at least two ways to achieve it:

For smaller changes, you can override the entrypoint with your own script that modifies some small parts; the script itself can be mounted from a ConfigMap.

Here’s a Kubernetes Pod spec snippet for a sample nginx service with a script mounted from the nginx-entrypoint ConfigMap:

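```yaml
spec:
  containers:
    - name: mynginx
      image: mynginx:1.0
      # Run the mounted script instead of the image's default entrypoint
      command: ["/ep/entrypoint.sh"]
      volumeMounts:
        - name: entrypoint-volume
          mountPath: /ep/
  volumes:
    - name: entrypoint-volume
      configMap:
        name: nginx-entrypoint
        # ConfigMap files are mounted as 0644 by default; the script must be executable
        defaultMode: 0755
```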

For bigger changes, you may want to create your own image based on the official one and rebuild it whenever a new version is released.
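A sketch of this second approach is shown below. The default tag in `NGINX_VERSION` and the copied files (`default.conf`, a `site/` directory) are hypothetical. Declaring the base tag as a build argument (supported before `FROM` since Docker 17.05) lets a CI pipeline rebuild on top of a new official release with `docker build --build-arg NGINX_VERSION=...` without editing the file.

```dockerfile
# ARG before FROM makes the base image tag configurable at build time.
ARG NGINX_VERSION=1.25
FROM nginx:${NGINX_VERSION}

# Changes too large for an entrypoint script: a custom server config and static content.
COPY default.conf /etc/nginx/conf.d/default.conf
COPY site/ /usr/share/nginx/html/
```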

Use good quality base images

Most official images available on Docker Hub are based on Debian. I even did some small research on this a couple of years ago (it is available here) and, for some reason, Ubuntu is not the most popular distribution. So if you like Debian, you can feel safe and join the others who also chose it. It’s a distribution with a mature ecosystem around it. If you prefer rpm-based systems (or your software requires them for some reason), I would avoid CentOS and consider Red Hat Enterprise Linux images. With RHEL 8, Red Hat released the Universal Base Image (UBI), which can be used freely without any fees. I simply trust those guys more, as they have invested a lot of resources in containers recently, and these new UBI images should be updated frequently by their security team.
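If you go the rpm route, a UBI-based application image can follow the same cleanup pattern as the Debian one. A sketch, assuming the `ubi8/ubi-minimal` variant (which ships `microdnf` instead of full `yum`/`dnf`) and a hypothetical `myapp` binary:

```dockerfile
FROM registry.access.redhat.com/ubi8/ubi-minimal

# Hypothetical application binary, built elsewhere.
COPY myapp /usr/local/bin/myapp

# Same idea as on Debian: remove the package management tools once nothing else
# needs to be installed. rm -f skips any path this image does not contain.
RUN rm -f /usr/bin/microdnf /usr/bin/rpm*

ENTRYPOINT ["/usr/local/bin/myapp"]
```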

Avoid building custom base images

Just don’t. Unless your paranoia level is close to extreme, you can reuse images like Debian, Ubuntu or UBI described earlier. On the other hand, if you’re really that paranoid, I doubt you trust anyone, including the people who brought us containers and Kubernetes.

Auto-rebuild application and intermediary images when vulnerabilities are found in a base image

In the old, legacy VM-based world, the process of fixing vulnerabilities found in a system is called patching. A similar practice exists in the container-based world, but in a specific form: instead of fixing a running system on the fly, you replace the whole image containing your app. The best way to achieve this is an automated process that rebuilds the image and even triggers a deployment. I found that the OpenShift ImageStreams feature addresses this problem best (details can be found in my article), but it’s not available on vanilla Kubernetes.

Conclusion

We switched from fairly static virtual machines to dynamic, ephemeral containers, but the challenge stays the same: how to keep them secure. In this part, we have covered the steps required to address it at the container image level. I always say that container technology enforces a new way of handling things we used to accomplish with rather manual, error-prone processes (e.g. golden images, VM templates). When migrating to containers, you need to embrace an automated and declarative style of managing your environments, including security-related activities. Leverage that to tighten the security of your systems, starting with the basics: choosing and maintaining your container images.
