Why GKE is probably the best Kubernetes service available

So you’ve heard of Kubernetes already, and maybe you’ve also tried to deploy it on your on-prem infrastructure or in the cloud. And although deploying an app on an existing cluster is easy, provisioning the whole infrastructure with a highly available control plane is certainly not. That’s when you’ll appreciate the hosted versions of Kubernetes offered by multiple public cloud vendors. I recently had a chance to take a quick look at one of them - Google Kubernetes Engine (GKE), available on Google Cloud Platform. Since the Kubernetes project was started by Google and builds on their vast experience in managing millions of containers running services we use every day, I set my expectations high. After just a couple of days, I must admit that GKE has exceeded them, and I want to show why I believe it’s the best hosted Kubernetes version available now.

Fast provisioning

Creating a new cluster takes less than 5 minutes. That is amazingly fast! I know some people say it’s irrelevant, as you don’t need to create clusters often, but it’s still impressive and gives you confidence that this is an optimized, well-crafted solution overall. Maybe it’s because Google uses Container-Optimized OS, or maybe they just do it the right way. I don’t know and honestly, I don’t care. I can provision clusters fast and I really like it. For my tests, I sometimes provisioned as many as 10 clusters in an hour. Why? Because now I can :-) (and I also needed to test multiple options at once)

Flexible node autoscaling

What I don’t like about the AWS hosted Kubernetes service (EKS) is the way nodes are provisioned. On GKE it’s very easy with node pools. I like the autoscaling feature that just works out of the box - with a single option checked, your cluster grows or shrinks depending on the load. Additionally, a new node pool can be created for you to satisfy the demands of your pods. This is called node auto-provisioning and it’s currently in beta, but it’s a very interesting feature that brings even more automation and frees you from taking care of those details.
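The "single option" in the console has a command-line equivalent. Here is a minimal sketch that only builds (and prints) a `gcloud container node-pools create` command with autoscaling enabled. The cluster, pool and region names are made up for illustration, and the helper `node_pool_create_args` is my own, not part of any Google library. Nothing is executed against GCP.

```python
import shlex


def node_pool_create_args(cluster, pool, region, min_nodes, max_nodes):
    """Build the argument list for creating an autoscaled node pool.

    All names passed in are hypothetical; the command is only assembled,
    never run.
    """
    if not 0 <= min_nodes <= max_nodes:
        raise ValueError("need 0 <= min_nodes <= max_nodes")
    return [
        "gcloud", "container", "node-pools", "create", pool,
        "--cluster", cluster,
        "--region", region,
        "--enable-autoscaling",          # the "single option" from the console
        "--min-nodes", str(min_nodes),   # lower bound for the autoscaler
        "--max-nodes", str(max_nodes),   # upper bound for the autoscaler
    ]


# Hypothetical names, used only to show the resulting command line.
args = node_pool_create_args("demo-cluster", "demo-pool", "europe-west1", 1, 5)
print(shlex.join(args))
```

As far as I know, in a regional cluster the minimum and maximum apply per zone, so check the resulting node count before relying on these numbers.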

Resiliency built-in

The Kubernetes control plane consists of master nodes that store all the information about the cluster state and all running containers. It’s the most fragile part, and it’s no surprise that it is managed by Google in a highly available setup. Be sure, however, to choose a regional cluster, which spreads control plane replicas across multiple zones; the zonal option runs the control plane in a single zone only. A hosted control plane is a standard feature of all Kubernetes as a Service solutions, but on GKE you also get an “Auto-repair” option for nodes. It’s very handy in situations where your nodes start misbehaving and cannot host your containers any longer. Following a rule that is also known from virtual machines (or instances, if you will), the faulty node is replaced with a new one instead of being rebooted and recovered. It’s also a nice example of the pets vs. cattle principle implemented in a way that just works.

Easy upgrades

I often work with OpenShift clusters and their “famous” Ansible-based installer, which is used for various purposes, including cluster upgrades. After a few upgrade procedures, I can firmly state that it’s not an easy or pleasant process. There are so many moving parts and places that are prone to even the smallest errors that the upgrades offered on GKE are one of the best features for me personally. I really appreciate the comfort they give and also the way they’re performed. The control plane upgrade is a separate process that needs to take place first, and although it disables access to the API server for a few minutes, your containers are still running and available to your users. The node upgrade, on the other hand, is much more pleasant and shouldn’t interrupt your work, as it’s done in a rolling-update fashion - each node is first cordoned and drained before the actual upgrade, which makes this second part almost transparent.
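To make the rolling-update idea concrete, here is a toy model of it in plain Python. It is not GKE’s implementation, just a sketch of the order of operations (cordon, drain, upgrade, uncordon) on an in-memory dictionary. The node names, pod names and version strings are invented for the example.

```python
def rolling_upgrade(nodes, target_version):
    """Upgrade nodes one at a time: cordon, drain, upgrade, uncordon.

    `nodes` maps a node name to {"version": str, "pods": list of pod names}.
    Returns the lowest number of schedulable nodes seen during the run.
    """
    cordoned = set()
    min_schedulable = len(nodes)
    for name in list(nodes):
        # Cordon: stop scheduling new pods onto this node.
        cordoned.add(name)
        schedulable = [n for n in nodes if n not in cordoned]
        if not schedulable:
            raise RuntimeError("no node left to receive evicted pods")
        min_schedulable = min(min_schedulable, len(schedulable))
        # Drain: move every pod to the least loaded schedulable node.
        while nodes[name]["pods"]:
            pod = nodes[name]["pods"].pop()
            target = min(schedulable, key=lambda n: len(nodes[n]["pods"]))
            nodes[target]["pods"].append(pod)
        # Upgrade the now-empty node, then make it schedulable again.
        nodes[name]["version"] = target_version
        cordoned.discard(name)
    return min_schedulable


# Hypothetical three-node cluster.
cluster = {
    "node-a": {"version": "1.11", "pods": ["web-1", "web-2"]},
    "node-b": {"version": "1.11", "pods": ["api-1"]},
    "node-c": {"version": "1.11", "pods": []},
}
lowest = rolling_upgrade(cluster, "1.12")
print(lowest, {name: node["version"] for name, node in cluster.items()})
```

Because only one node is out of rotation at any time, the pods always have somewhere to go, which is why this part of the upgrade feels almost transparent.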

Secure networking

I like the design of the whole network architecture on Google Cloud Platform - a global VPC with subnets that span zones makes it much easier to operate, especially when creating multi-region deployments. That is also true for GKE. There are standard features available from other cloud vendors too, such as a private address space for the control plane and access to it limited to a defined CIDR range. It’s also no surprise that you can enable NetworkPolicy and limit traffic inside a cluster with simple rules. There is also something called VPC-native networking, which makes your pods reachable from other subnets of your VPC (or from your on-prem network via VPN) using IP aliases. And this one pairs nicely with the best feature of them all - intranode visibility. Finally, you can see all the traffic between your containers using flow logs. Trust me - this feature is highly coveted in on-premise environments, as network security teams don’t trust this fancy container technology we are so fascinated by.
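As an illustration of how simple such NetworkPolicy rules can be, here is a small sketch that generates a standard `networking.k8s.io/v1` NetworkPolicy as JSON. The policy name, labels and port are assumptions chosen for the example, and the helper function is mine. The policy allows pods labelled `app=web` to reach pods labelled `app=api` on TCP 8080, and because the selected pods are now covered by an ingress policy, other ingress traffic to them is denied.

```python
import json


def allow_from_label_policy(name, namespace, target_labels, source_labels, port):
    """Return a NetworkPolicy manifest (as a dict) that allows ingress to the
    target pods only from pods carrying the source labels, on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods with these labels may connect...
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    # ...and only on this port.
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical names and labels.
policy = allow_from_label_policy(
    "allow-web-to-api", "default", {"app": "api"}, {"app": "web"}, 8080
)
print(json.dumps(policy, indent=2))
```

The output can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML. Keep in mind that the rule only has an effect when network policy enforcement is enabled on the cluster.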

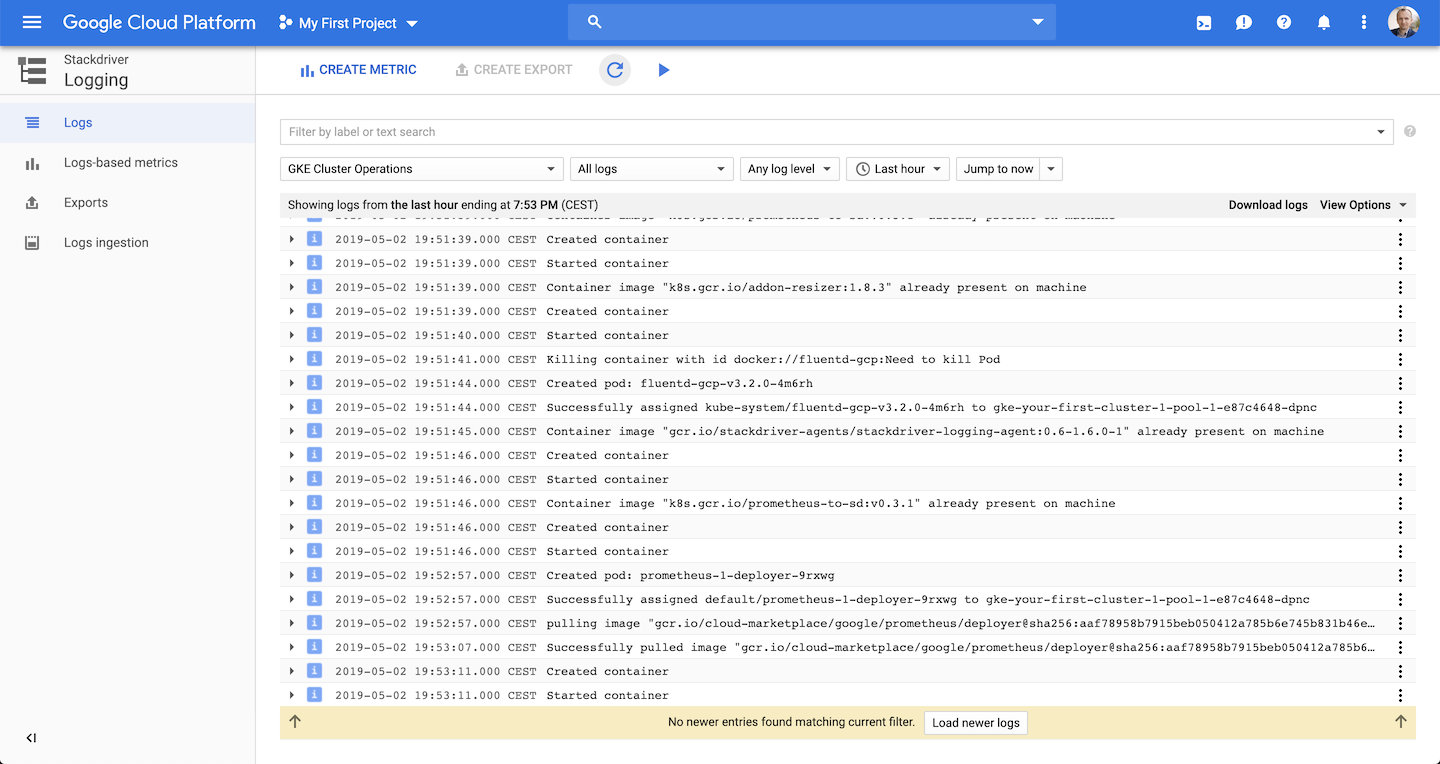

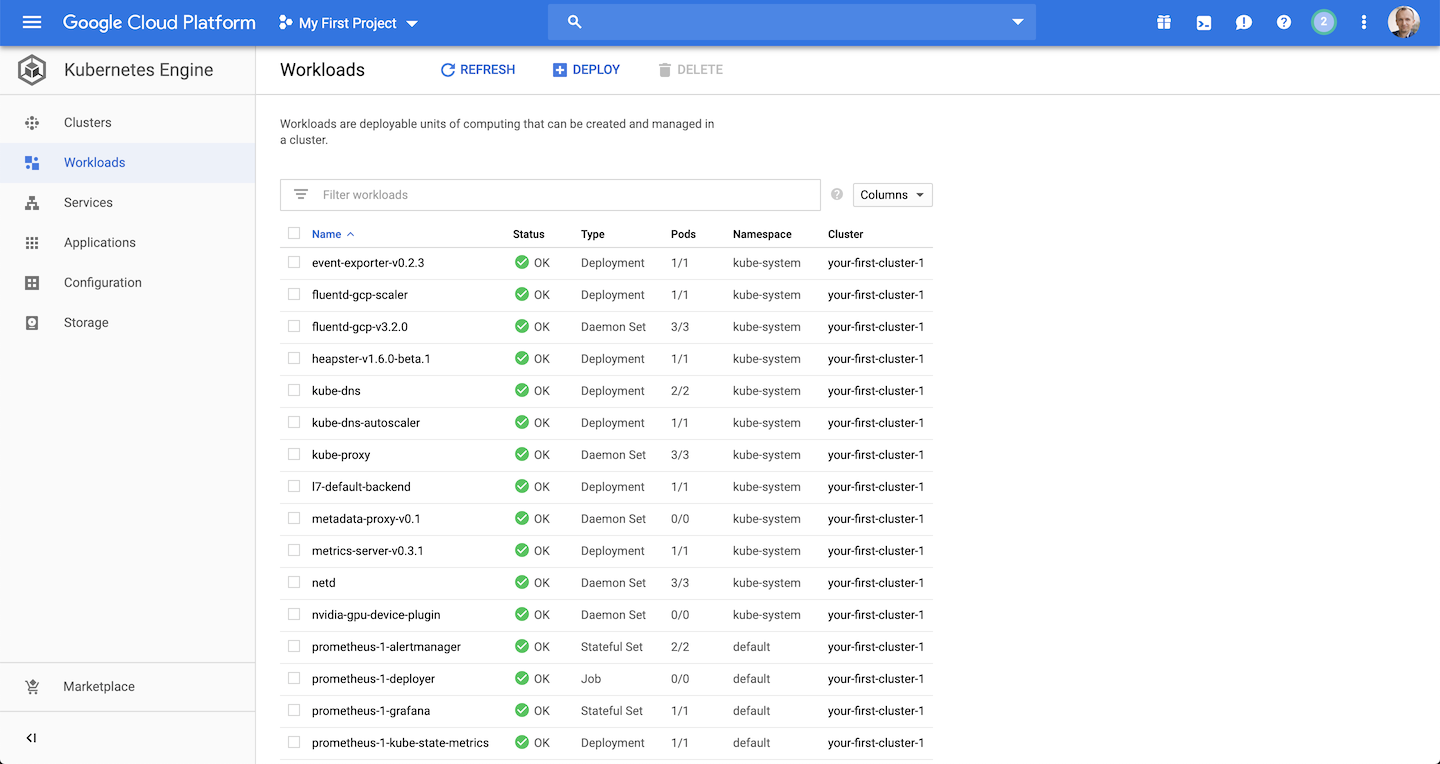

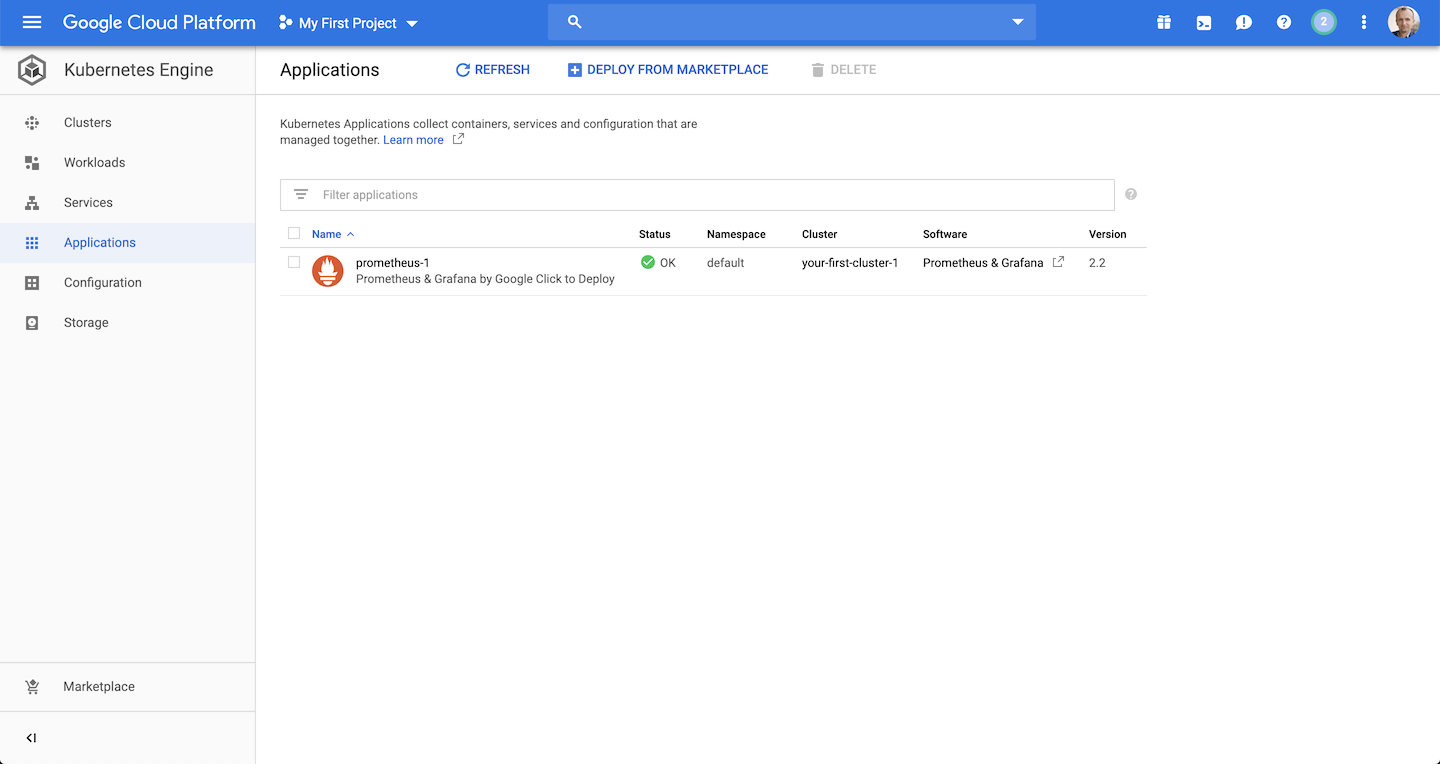

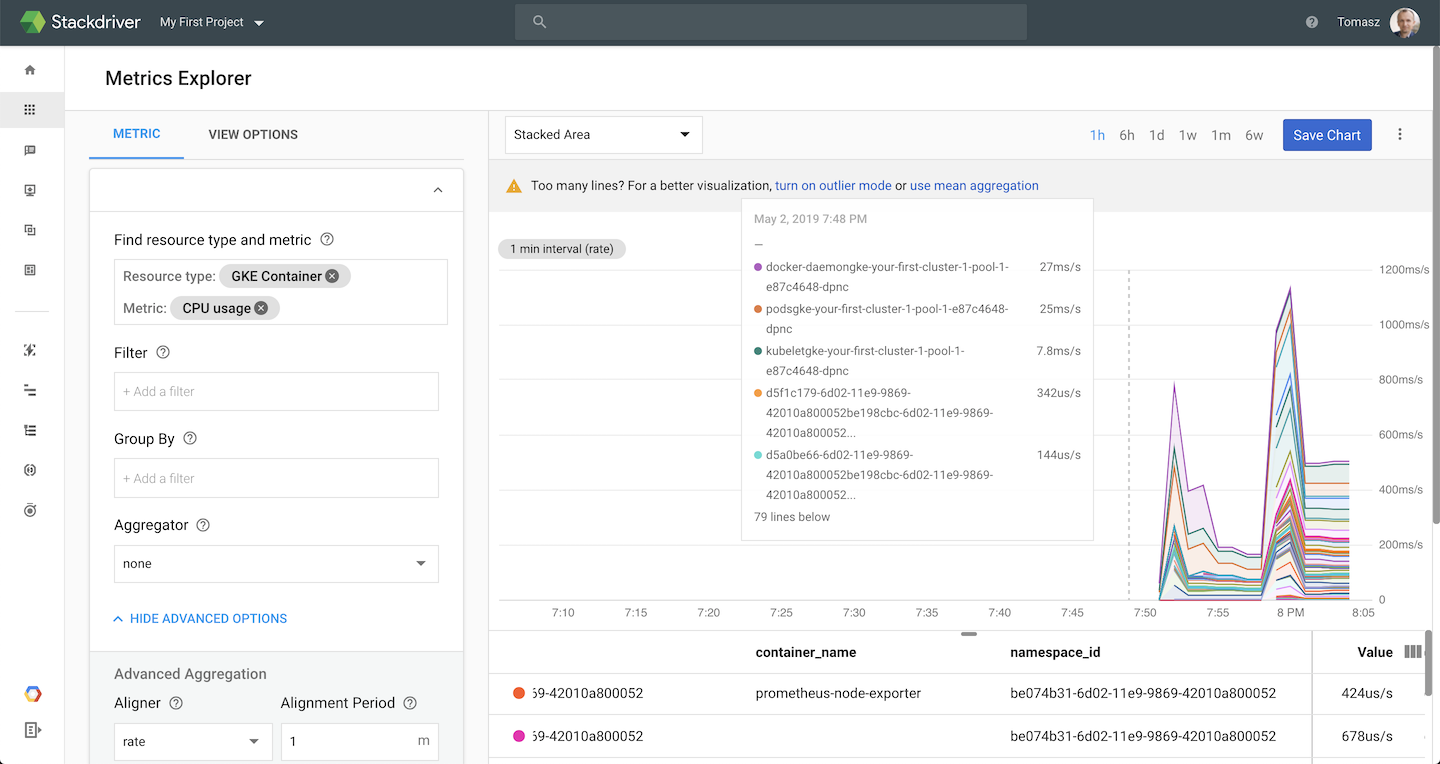

Nice looking interface

I use kubectl 90% of the time when working with Kubernetes. The main reason is that I’m just a CLI guy. The second is that the standard Kubernetes Dashboard just sucks and is unusable for me - it’s more like an HTML version of kubectl output with read-only capabilities. Look at the OpenShift console - it’s just pretty and very user friendly. The GKE console is maybe not that nice, but it’s certainly far better than the Dashboard, and it’s the first time I actually started deployments from a GUI rather than from my terminal. Just look at those:

By the way - I have my own personal conspiracy theory. I believe that the Kubernetes Dashboard project hasn’t changed a lot because it’s a separate service on most hosted solutions, and vendors (like Google) want to attract people with their fancy UI in contrast to this plain dashboard.

It’s cheap!

I expected Google to monetize its experience and charge a lot for its Kubernetes service. Boy, I was so wrong - it’s the cheapest option available! I haven’t done thorough calculations, but you can visit this site for more details. What is surprising is that on GKE you pay only for nodes and not for the control plane (master servers, etcd), which is the hardest and most painful part to manage. Did I mention that you can even decrease your bill by using preemptible nodes with just a single option checked in your node pool configuration? Awesome!

A few drawbacks

Of course, there are some drawbacks. The first one is the choice of Kubernetes versions available. At the time of writing, the officially supported list ends with 1.12, while the newest release is 1.14. The second is that you need to know GCP pretty well to run Kubernetes in production, as there are many VPC and Cloud IAM related topics that affect your cluster security. Also, many features described above are in beta, which means they are not supported and you can’t rely on Google support if any problem arises.

Conclusion

I’m a proud and proficient user of AWS, but what they’ve done with their own version of a Kubernetes service (EKS) is a huge mistake. I’m not a fan of Azure, but I know that Azure Kubernetes Service is a lot better than EKS, with some hiccups when it comes to stability (as with most Azure services, unfortunately). I will try it soon, but for now, the best option for deploying Kubernetes in the cloud is GKE. Google’s strategy is quite straightforward - they want to dominate the market of containerized workloads. With the Google Anthos product for on-premise GKE deployments and the quality of their products, they are (probably) destined for success.
