Honest review of OpenShift 4

We waited over 7 months for the OpenShift Container Platform 4 release. We even got version 4.1 directly because Red Hat decided not to release version 4.0. And when it was finally released, we got what is almost a new product. That is a direct result of Red Hat’s acquisition of CoreOS, announced at the beginning of 2018. I believe that most of the new features in OpenShift 4 come from the hands of a new army of developers from CoreOS and their approach to building innovative platforms.

But is it really that good? Let me go through the most interesting features and also the things that are not as good as we’d expect after more than 7 months of development (OpenShift 3.11 was released in October 2018).

If it ain’t broke, don’t fix it

Most parts of OpenShift haven’t changed or have changed very little. In my comparison of OpenShift and Kubernetes I’ve pointed out its most interesting features, and there are also a few remarks on version 4.

To keep it short, here’s my personal list of the best features of OpenShift that stayed at the same good level compared to version 3:

- Integrated Jenkins - makes it easy to build, test and deploy your containerized apps

- BuildConfig objects used to create container images - with Source-To-Image (s2i) it is very simple and easy to maintain

- ImageStreams as an abstraction level that eases the pain of upgrading or moving images between registries (e.g. automatic updates)

- Tightened security rules with Security Context Constraints (SCC) that disallow running containers as the root user. Although it’s a painful experience at first, this is definitely a good way of increasing the overall security level.

- Monitoring handled by the best monitoring software dedicated to container environments - Prometheus

- Built-in OAuth support for services such as Prometheus, Kibana and others. Unified way of managing your users, roles and permissions is something you’ll appreciate when you start to manage access for dozens of users
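
To make the BuildConfig and ImageStream items above a bit more concrete, here is a minimal sketch of a Source-To-Image build. The application name `myapp` and the Git URL are hypothetical, and I assume a `python` builder ImageStream is available in the `openshift` namespace, which may differ on your cluster.

```yaml
# Hypothetical ImageStream that will receive the built image
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: myapp
---
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: myapp
spec:
  source:
    type: Git
    git:
      uri: https://example.com/org/myapp.git   # hypothetical repository
  strategy:
    type: Source                               # Source-To-Image (s2i) strategy
    sourceStrategy:
      from:
        kind: ImageStreamTag
        name: python:latest                    # assumed builder image
        namespace: openshift
  output:
    to:
      kind: ImageStreamTag
      name: myapp:latest
  triggers:
  - type: ConfigChange                         # build when the BuildConfig is created
  - type: ImageChange                          # rebuild when the builder image is updated
```

The `ImageChange` trigger is what gives you the automatic updates mentioned above: when the builder image behind the ImageStream tag changes, a new build is started.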

Obviously, we can also leverage Kubernetes features remembering that some of them are not supported by Red Hat and you’ll be on your own with any problems they may cause.

The best features

I’ll start with the features I consider to be the best and sometimes revolutionary, especially when compared to other Kubernetes-based platforms or even to the previous version 3 of OpenShift.

New flexible and very fast installer

This is huge and probably one of the best features. If you’ve ever worked with the Ansible installer available in version 3, then you’ll be pleasantly surprised or even relieved that you don’t need to touch it ever again. Its code was messy, upgrades were painful and often even small changes took a long time to apply (sometimes resulting in failures at the end).

Now it’s something far better. It uses Terraform underneath (the best tool available for this purpose), it is faster and more predictable, and it’s also easier to operate. Because the whole installation is performed by a dedicated operator, all you need to do is provide a fairly short yaml file with the necessary details.

Here’s the whole file that is sufficient to install a multi-node cluster on AWS:

    apiVersion: v1
    baseDomain: example.com
    compute:
    - hyperthreading: Enabled
      name: worker
      platform:
        aws:
          rootVolume:
            size: 50
            type: gp2
          type: t3.large
      replicas: 2
    controlPlane:
      hyperthreading: Enabled
      name: master
      platform:
        aws:
          rootVolume:
            size: 50
            type: gp2
          type: t3.xlarge
      replicas: 3
    metadata:
      creationTimestamp: null
      name: ocp4demo
    networking:
      clusterNetwork:
      - cidr: 10.128.0.0/14
        hostPrefix: 23
      machineCIDR: 10.0.0.0/16
      networkType: OpenShiftSDN
      serviceNetwork:
      - 172.30.0.0/16
    platform:
      aws:
        region: us-east-1
    pullSecret: '{"auths":{"cloud.openshift.com":{"auth":"REDACTED","email":"tomasz@example.com"}}}'
    sshKey: |
      ssh-rsa REDACTED tomasz@example.com

The second and even more interesting thing about the installer is that it uses Red Hat Enterprise Linux CoreOS (RHCOS) as the base operating system. The biggest difference from classic Red Hat Enterprise Linux (RHEL) is how it’s configured and maintained. While RHEL is a traditional system you operate manually with ssh and Linux commands (sometimes executed by config management tools such as Ansible), RHCOS is configured with Ignition (a custom bootstrap and config tool developed by CoreOS) at first boot and shouldn’t be configured in any other way. That basically allows us to create a platform that follows the immutable infrastructure principle - all nodes (except the control plane with master components) can be treated as ephemeral entities and, just like pods, can be quickly replaced with fresh instances.

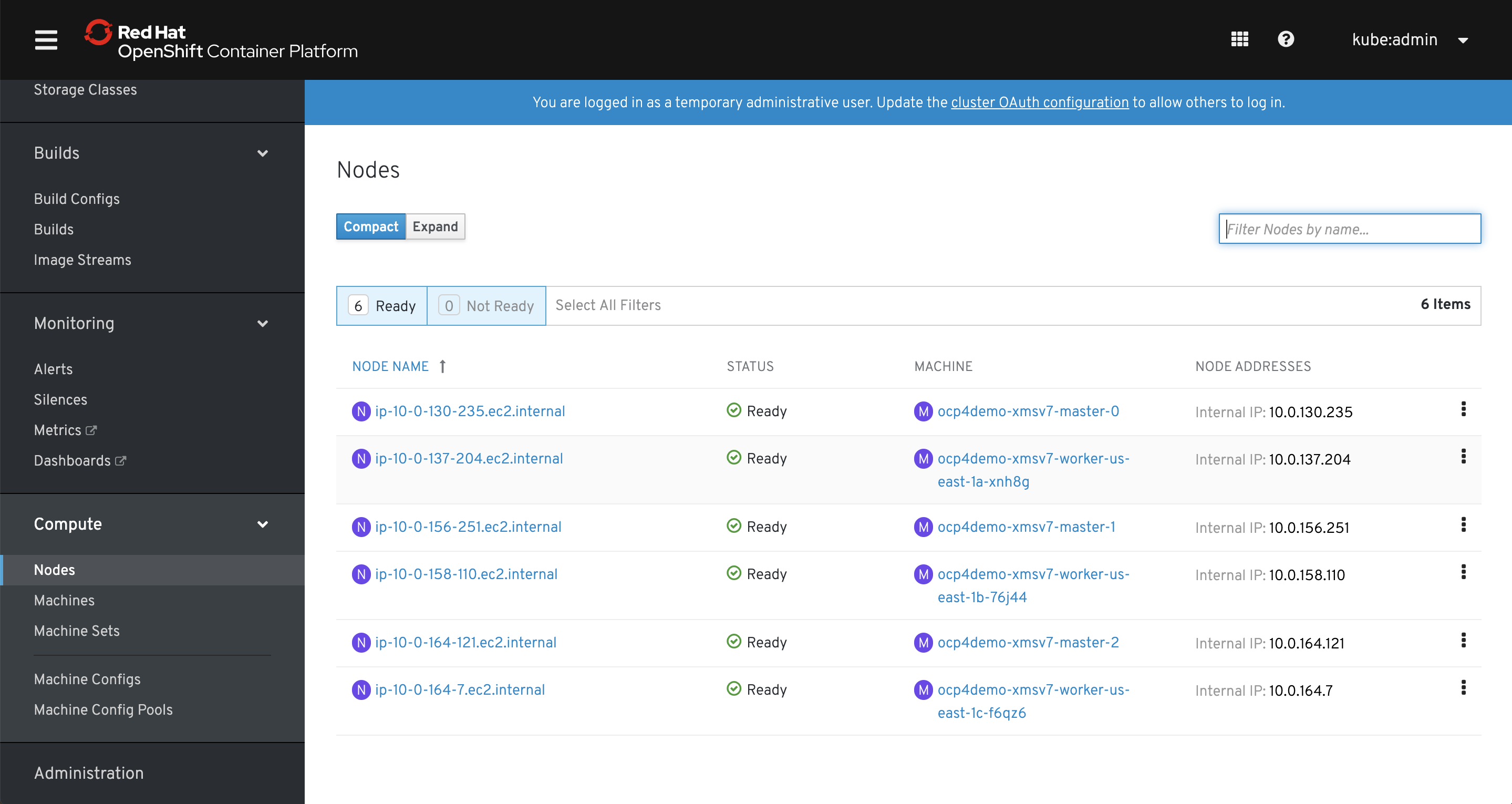

Unified way of managing nodes

Red Hat introduced a new API for node management. It’s called “Machine API” and is mostly based on the Kubernetes Cluster API project. This is a game changer when it comes to provisioning of nodes. With MachineSets you can easily distribute your nodes among different availability zones, and you can also manage multiple node pools with different settings (just like in GKE, which I reviewed some time ago), e.g. a pool for testing or a pool for machine learning with GPUs attached. Management of the nodes has never been that easy! I predict it’s going to be a game changer for Red Hat as well. With this new flexible way of provisioning, alongside RHCOS as the default system, OpenShift becomes very competitive with the Kubernetes services available on major cloud providers (GKE, EKS, AKS).
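
To give an idea of what such a node pool looks like, here is a trimmed sketch of a MachineSet for a hypothetical GPU pool in a single availability zone. The names, the cluster ID `ocp4demo` and the instance type are my assumptions, and the provider section is deliberately incomplete (AMI, subnet, security groups, IAM profile and user data are left out), so treat it as an illustration rather than something to apply as-is.

```yaml
apiVersion: machine.openshift.io/v1beta1
kind: MachineSet
metadata:
  name: ocp4demo-gpu-us-east-1a              # hypothetical pool name
  namespace: openshift-machine-api
  labels:
    machine.openshift.io/cluster-api-cluster: ocp4demo
spec:
  replicas: 2                                # number of machines in this pool
  selector:
    matchLabels:
      machine.openshift.io/cluster-api-cluster: ocp4demo
      machine.openshift.io/cluster-api-machineset: ocp4demo-gpu-us-east-1a
  template:
    metadata:
      labels:
        machine.openshift.io/cluster-api-cluster: ocp4demo
        machine.openshift.io/cluster-api-machineset: ocp4demo-gpu-us-east-1a
    spec:
      metadata:
        labels:
          node-role.kubernetes.io/gpu: ""    # hypothetical role label for the resulting nodes
      providerSpec:
        value:
          apiVersion: awsproviderconfig.openshift.io/v1beta1
          kind: AWSMachineProviderConfig
          instanceType: p3.2xlarge           # assumed GPU instance type
          placement:
            region: us-east-1
            availabilityZone: us-east-1a
          # AMI, subnet, security groups, IAM instance profile and
          # user-data secret omitted for brevity
```

One MachineSet per availability zone (or per pool) is the usual pattern; changing `replicas` is all it takes to grow or shrink the pool.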

Rapid cluster autoscaling

Thanks to the previous feature we can finally scale our cluster in a very easy and automated fashion. OpenShift delivers a cluster autoscaling operator that can adjust the size of your cluster by provisioning or destroying nodes. With RHCOS it is done very quickly, which is a huge improvement over the manual, error-prone process used with RHEL nodes in the previous version of OpenShift.

It works not only on AWS but also on on-premises installations based on VMware vSphere. Hopefully, soon it will be possible on most major cloud providers and maybe on non-cloud environments as well (spoiler alert - it will, see below for more details).

We missed this elasticity feature, and it finally narrows the gap between those who are lucky enough (or simply prefer) to use the cloud and those who for some reason choose to build on their own hardware.
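
As a rough sketch of how this is wired together: a cluster-wide ClusterAutoscaler object sets the overall limits, and a MachineAutoscaler object per MachineSet defines how far that particular pool may scale. The limits, timings and the MachineSet name below are hypothetical values chosen for illustration.

```yaml
apiVersion: autoscaling.openshift.io/v1
kind: ClusterAutoscaler
metadata:
  name: default
spec:
  resourceLimits:
    maxNodesTotal: 12            # hypothetical upper bound for the whole cluster
  scaleDown:
    enabled: true
    delayAfterAdd: 10m           # wait after a scale-up before considering scale-down
    unneededTime: 10m            # how long a node must be unneeded before removal
---
apiVersion: autoscaling.openshift.io/v1beta1
kind: MachineAutoscaler
metadata:
  name: worker-us-east-1a
  namespace: openshift-machine-api
spec:
  minReplicas: 1
  maxReplicas: 6
  scaleTargetRef:
    apiVersion: machine.openshift.io/v1beta1
    kind: MachineSet
    name: ocp4demo-worker-us-east-1a   # hypothetical MachineSet name
```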

Good parts you’ll appreciate

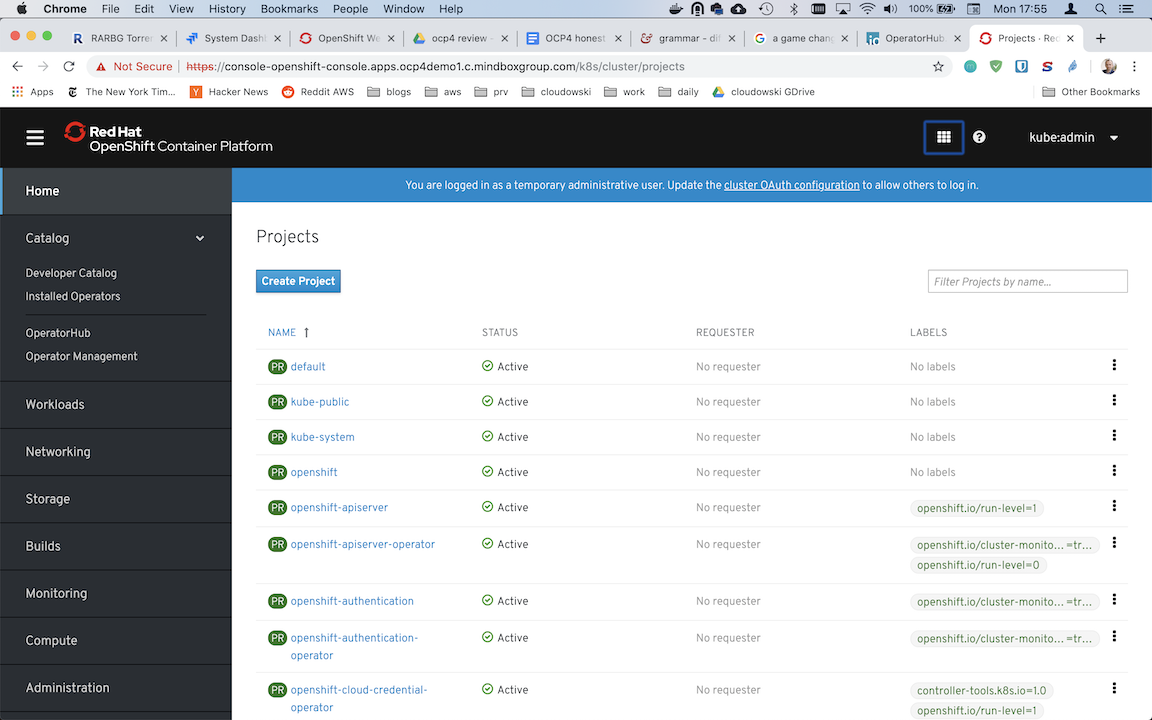

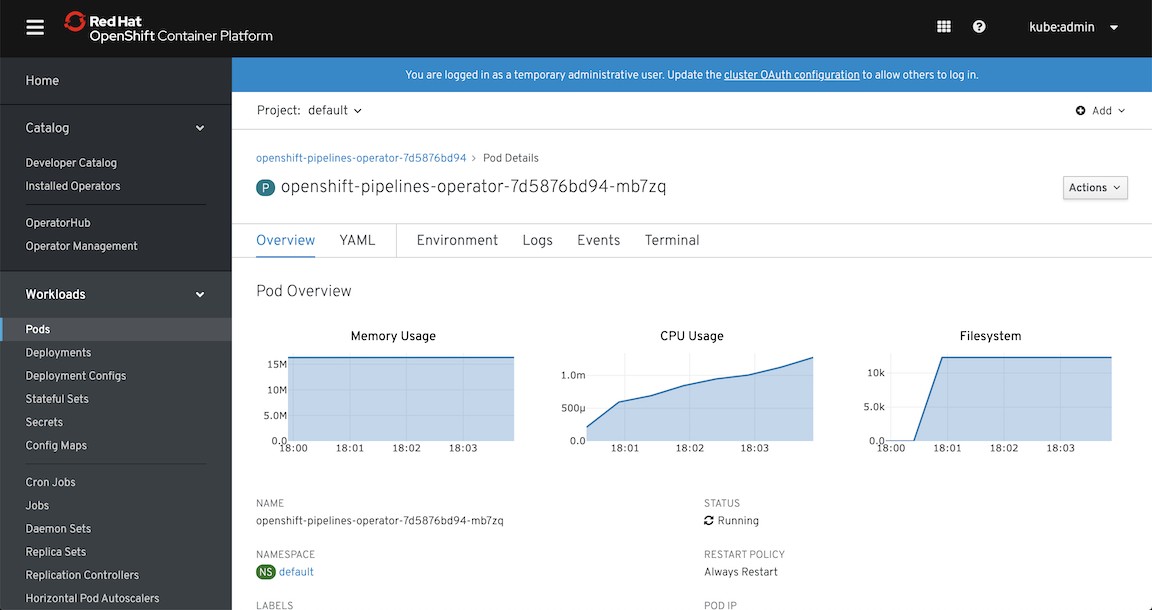

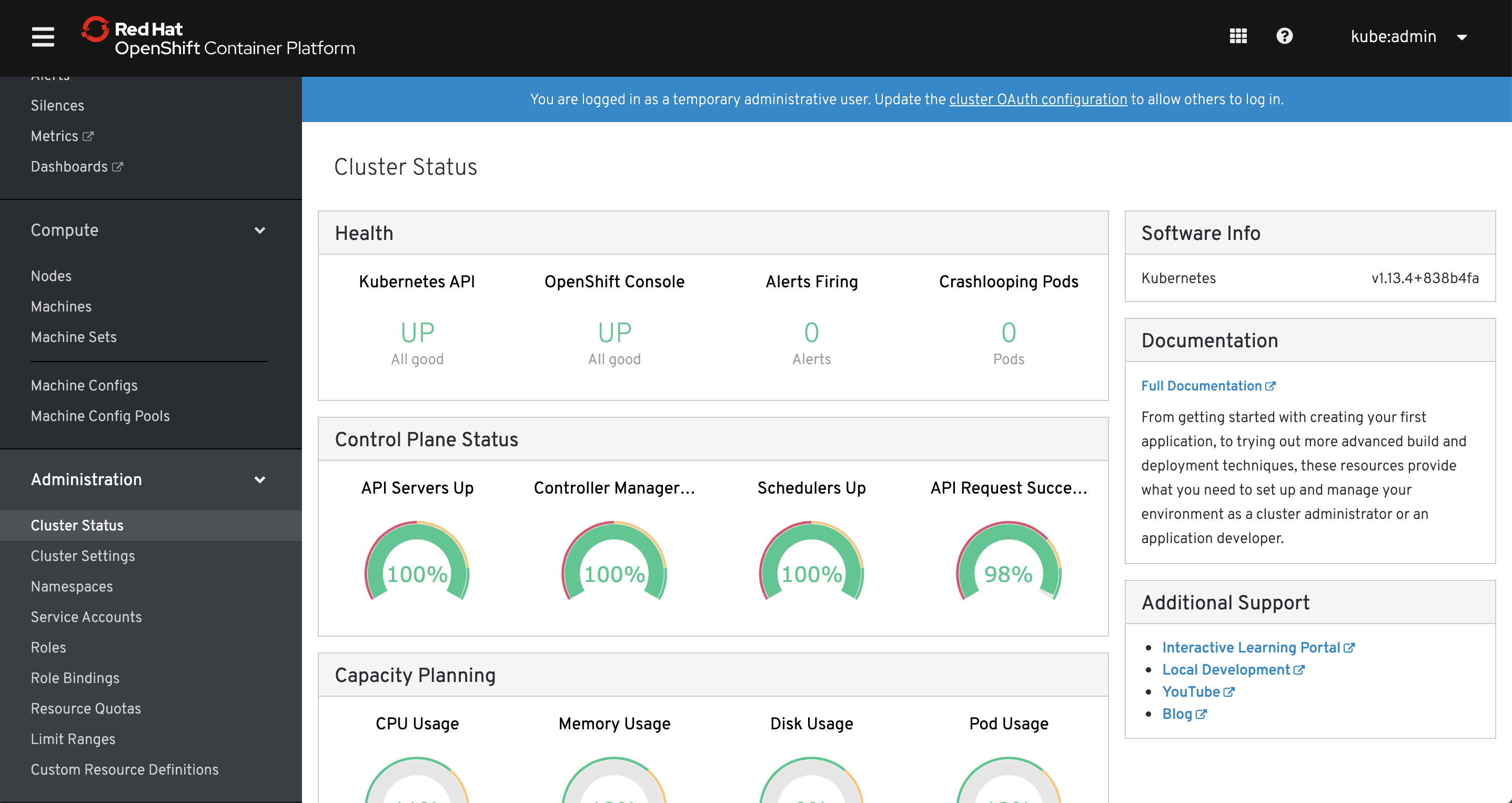

New nice-looking web console that is very practical

This is the part most visible to end users, and it looks like it was completely rewritten: a better designed, good-looking piece of software. In version 3 we saw a part of it, responsible for cluster maintenance, but now it’s a single interface for both operations and developers. Cluster administrators will appreciate friendly dashboards where you can check cluster health and leverage tighter integration with Prometheus monitoring to observe the workloads running on it. Although many buttons open a simple editor with a yaml template in it, it is still the best web interface available for managing your containers, their configuration and external access, or for deploying a new app without any yaml.

Red Hat also prepared a centralized dashboard (https://cloud.redhat.com) for managing all your OpenShift clusters. It’s quite simple at the moment, but I assume this is just an early version.

Oh, they also got rid of one annoying thing - now you finally log in once and leverage the Single Sign-On feature to access external services such as Prometheus, Grafana and Kibana dashboards, Jenkins and others that you can configure with OAuth.

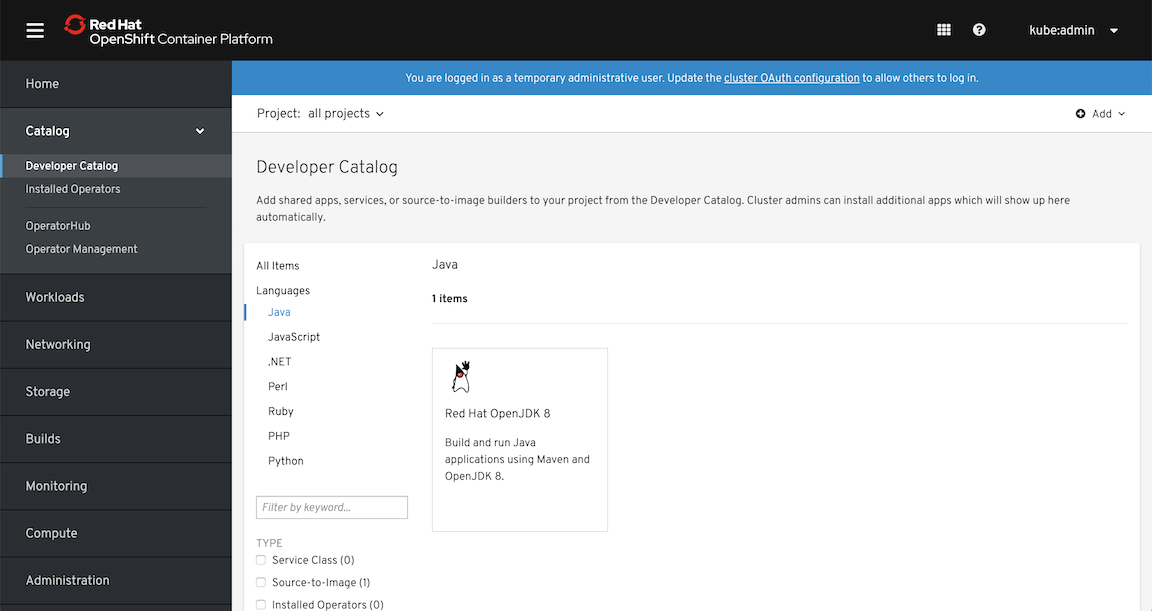

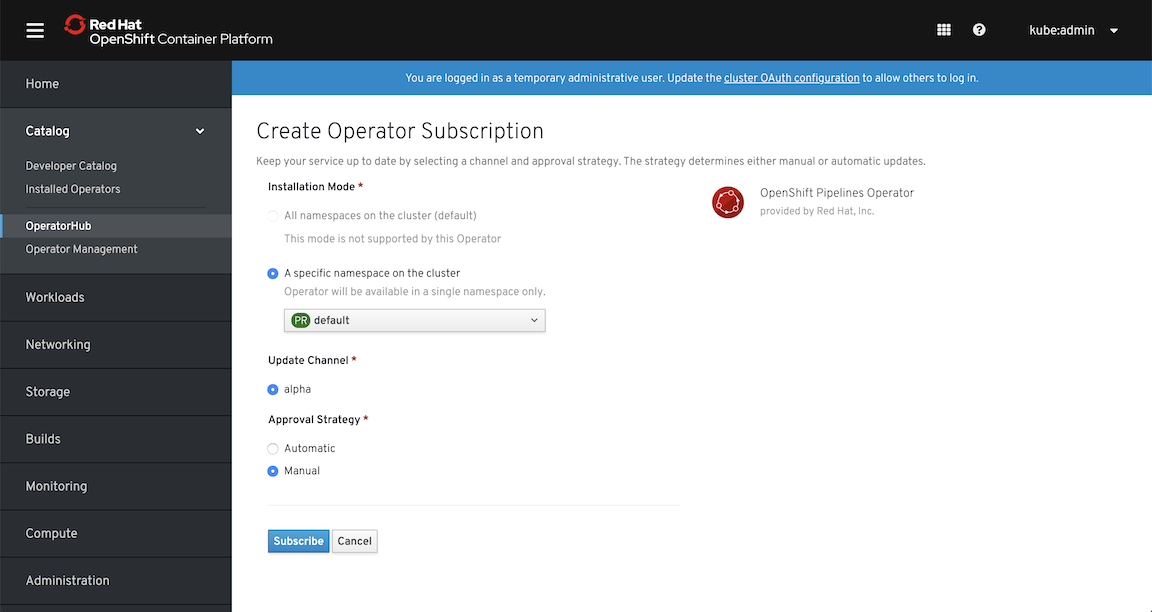

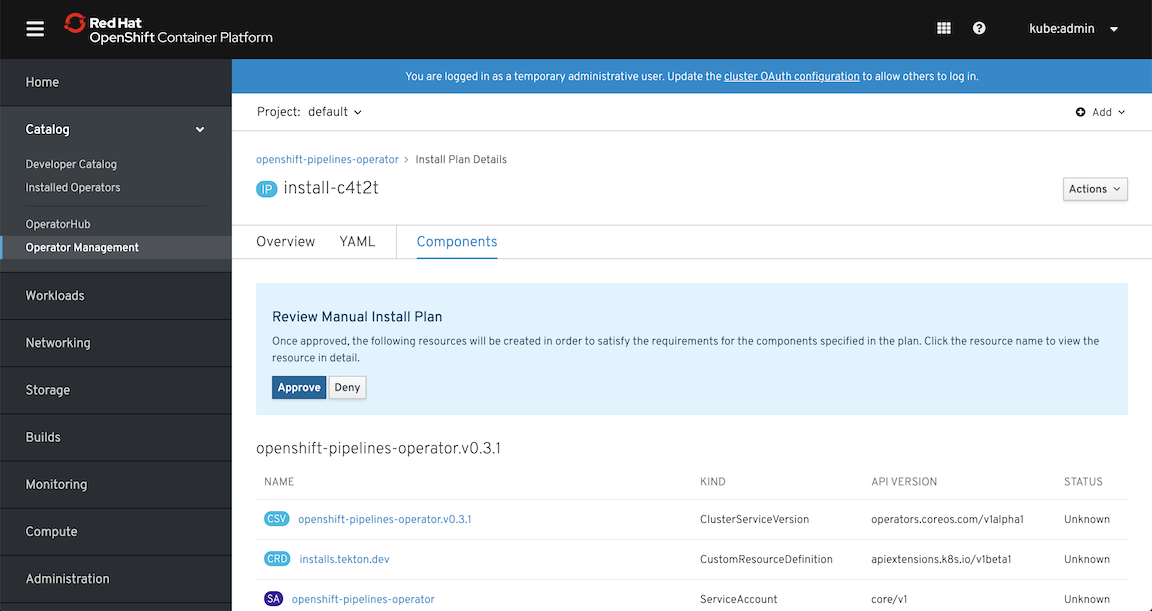

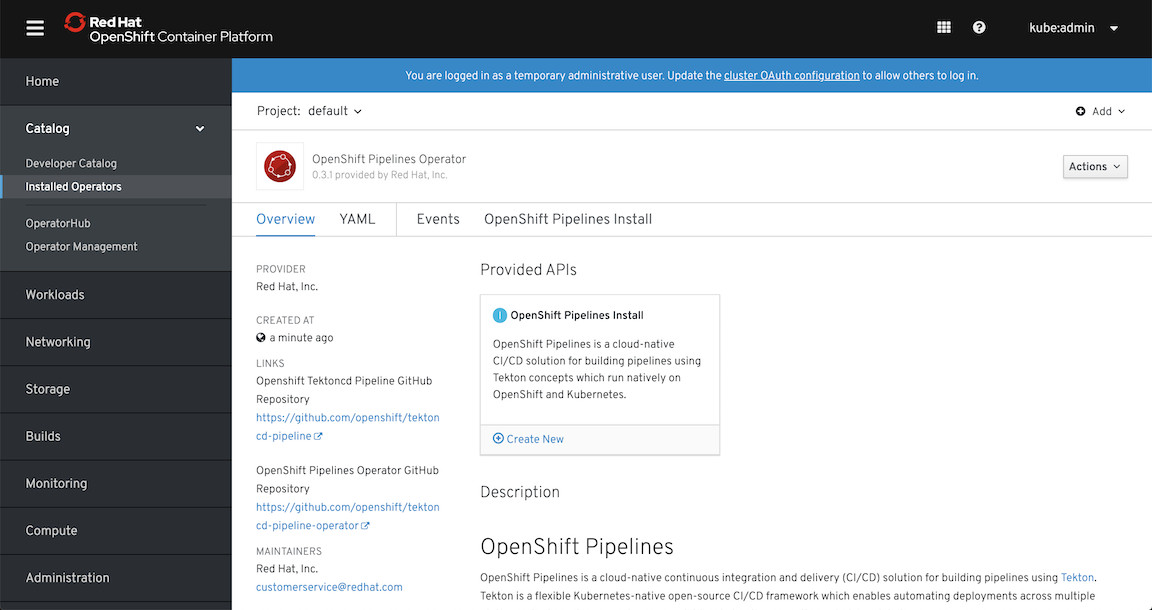

Operators for cluster maintenance and as first-class citizens for your services

The operator pattern leverages the Kubernetes API and promises “to put operational knowledge into software”, which for end users means an easy way of deploying and maintaining complex services. It’s not a big surprise that in OpenShift almost everything is configured and maintained by operators. After all, this concept was born in CoreOS and has brought us a level of automation we could only dream of. In fact, Red Hat deprecated its previous attempt to automate everything with Ansible Service Broker and Service Catalog. Now operators handle most of the tasks such as cluster installation, its upgrades, ingress and registry provisioning, and many, many more. No more Ansible - just feed these operators with proper yaml files and wait for the results.
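
Here is what “feeding an operator with yaml” can look like in practice. This sketch assumes the community etcd operator is already installed in your namespace; the cluster name, size and version are example values.

```yaml
# A custom resource handled by the etcd operator (assumed to be installed):
# the operator creates the pods, forms the cluster and keeps it at the requested size.
apiVersion: etcd.database.coreos.com/v1beta2
kind: EtcdCluster
metadata:
  name: example
spec:
  size: 3            # desired number of etcd members
  version: 3.2.13    # etcd version the operator should run
```

Changing `size` or `version` and applying the file again is all it takes - the operator works out the steps needed to get there.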

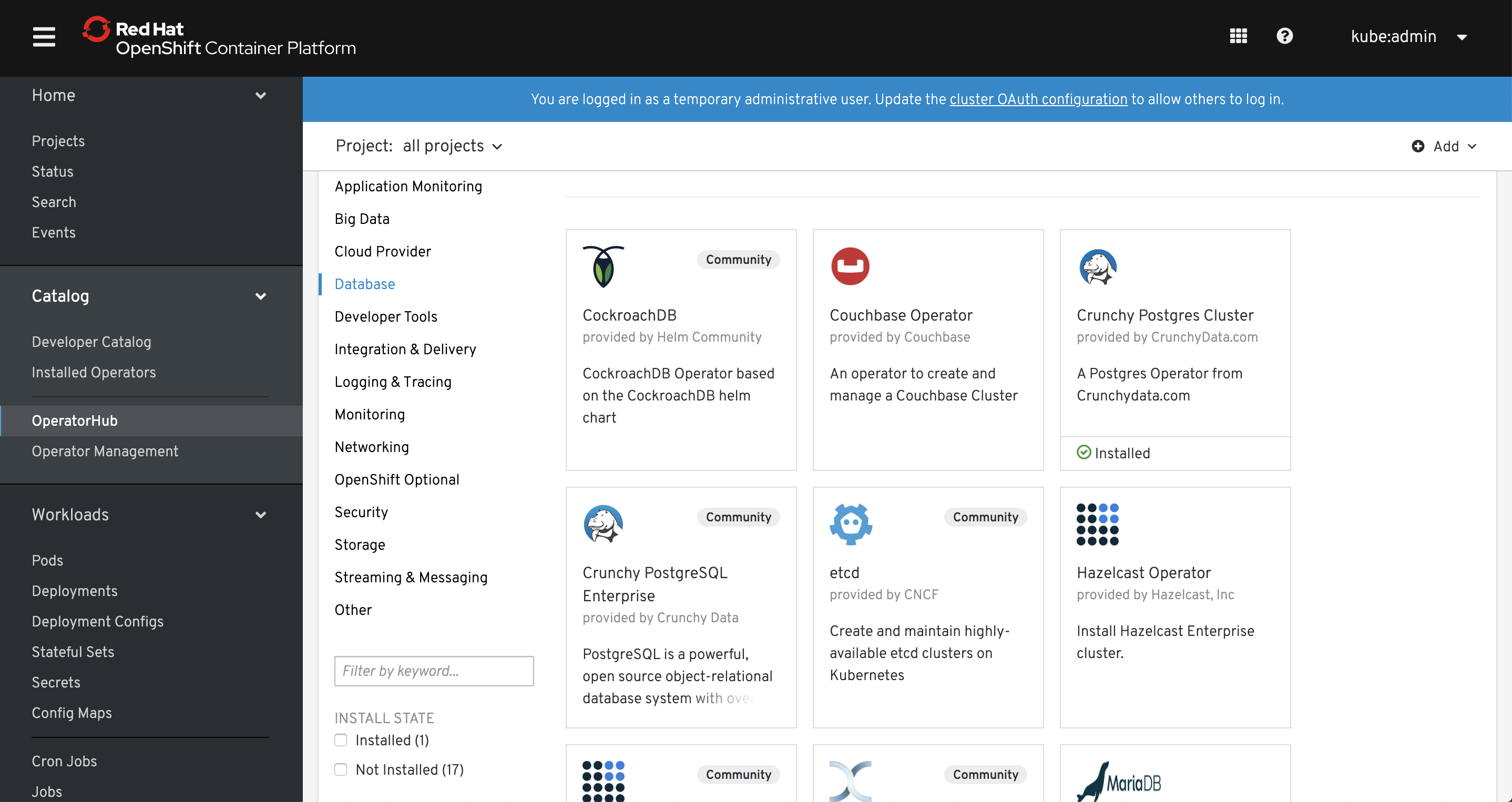

At the same time, Red Hat created a website with operators (https://www.operatorhub.io/) and embedded it inside OpenShift. They say it will grow and you’ll be able to find many easy-to-use services there. Actually, during the writing of this article, the number of operators available on OperatorHub has doubled, and I expect it will keep growing and become more stable (some of the operators didn’t work for me or required additional manual steps).

For anyone interested in providing their software as an operator, there is the operator-framework project that helps to build it (operator-sdk), run it and maintain it (with Operator Lifecycle Manager). In fact, you can start even without knowing how to write Go, as it provides a way to create an operator using Ansible (and converts Helm Charts too). With some small shortcomings, it’s the fastest way to try this new way of writing Kubernetes-native applications.

In short - operators can be treated as a way of providing services in your own environment similar to the ones available on public cloud providers (e.g. a managed database, a Kafka cluster, a Redis cluster etc.) with a major difference - you have control over the software that provides those services and you can build them on your own (become a producer), while in the cloud you are just a consumer.

I think that aligns perfectly with the open source spirit that started an earlier revolution - the Linux operating system, the building block for most of the systems running today.

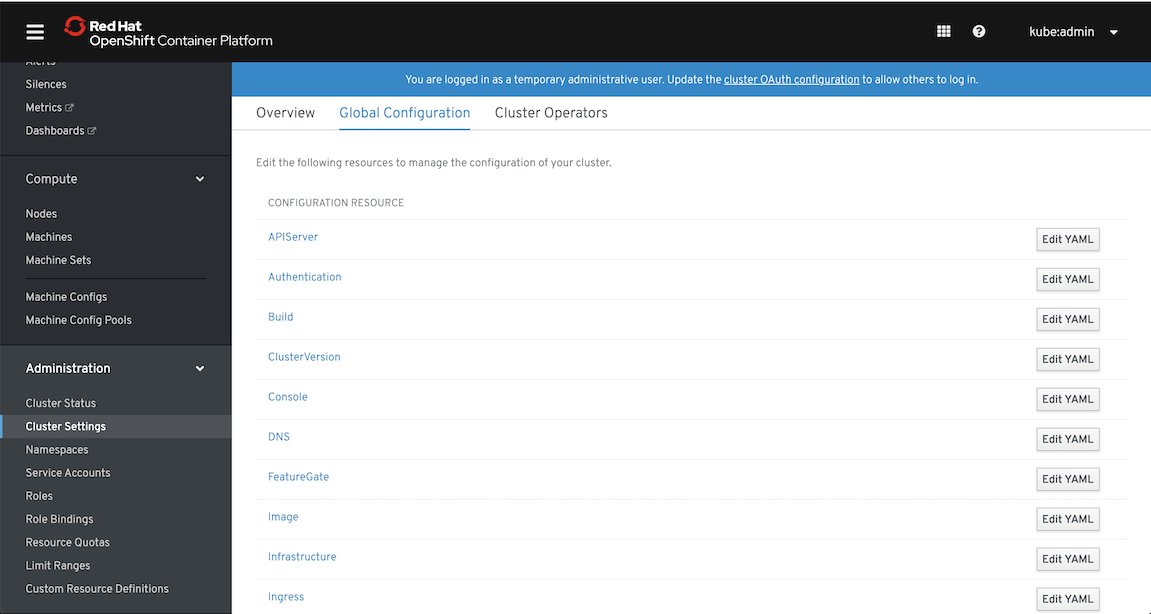

Cluster configuration kept as API objects that ease its maintenance

Forget about configuration files kept somewhere on the servers. They cause too many problems with maintenance and are just too old-school for modern systems. It’s time for the “everything-as-code” approach. In OpenShift 4 every component is configured with Custom Resources (CR) that are processed by ubiquitous operators. No more painful upgrades and synchronization among multiple nodes, and no more configuration drift. You’re going to appreciate how easy maintenance has become. Here is a short list of cluster components that are now configured by operators and that were previously maintained in a rather cumbersome way (i.e. with different files provisioned by Ansible or manually):

- API server (feature gates and options)

- Nodes via Machine API (see above for more details)

- Ingress

- Internal DNS

- Logging (EFK) and Monitoring (Prometheus)

- Sample applications

- Networking

- Internal Registry

- OAuth (and authentication in general)

- And many more..

Now all these things are maintained from code that is (or rather should be) versioned, audited and reviewed for changes. Some people call it GitOps, I myself call it “Everything as Code” or to put it simply - the way it should be managed from the beginning.
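
As one example of such an object, this is roughly how authentication can be configured with the cluster-wide OAuth resource. The provider name and the secret name are hypothetical, and I assume a secret with an htpasswd file already exists in the `openshift-config` namespace.

```yaml
apiVersion: config.openshift.io/v1
kind: OAuth
metadata:
  name: cluster                  # the single, cluster-wide OAuth configuration
spec:
  identityProviders:
  - name: local-users            # hypothetical provider name shown on the login page
    mappingMethod: claim
    type: HTPasswd
    htpasswd:
      fileData:
        name: htpass-secret      # assumed secret in the openshift-config namespace
```

A file like this can live in a Git repository next to your other manifests, which is exactly what makes the “Everything as Code” approach work.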

Bad parts (or not good enough yet)

Nothing is perfect, even OpenShift. I’ve found a few things that I consider to be less enjoyable than the previous features. I suspect and hope they will improve in future releases, but at the time of writing (OpenShift 4.1) they spoil the overall picture.

Limited support for fully automatic installation

The biggest disappointment is the list of supported platforms that offer automatic installation. Here it is:

- AWS

- VMware vSphere

Quite short, isn’t it? It means that when you want to install it on your own machines you need to have vSphere. If you don’t then be prepared for a less flexible install process that involves many manual steps and is much, much slower.

It also implies another flaw - without a supported platform, you won’t be able to use cluster autoscaling or even manual scaling of machines. It will all be left for you to manage manually.

This makes OpenShift 4 fully usable only on AWS and vSphere. Although it could work anywhere, elsewhere it is a less flexible option with a limited set of features. Red Hat promises to extend the list of supported platforms in future releases (Azure, GCP and OpenStack are coming in version 4.2) - there are already existing implementations for bare metal installations too, so hopefully this will be covered as well.

You cannot perform disconnected installations

Some organizations have very tight security rules that cut out most of the external traffic. In the previous version, you would use a disconnected installation that could be performed offline without any access to the internet. Now OpenShift requires access to Red Hat resources during installation - they collect anonymized data (Telemetry) and provide a simple dashboard from which you can control your clusters.

They promise to fix it in upcoming version 4.2 so please be patient.

Istio is still in Tech Preview and you can’t use it in your prod yet

I’m not sure about you, but many organizations (and individuals like me) have been waiting for this particular feature. We’ve had enough of watching demos and listening to how Istio is the best service mesh and how many problems it will address. Give us a stable (and in the case of Red Hat also supported) version of Istio! According to the published roadmap it was supposed to be available already in version 4.0, but that version was never released, so we obviously expected it to be GA in 4.1. For many, this is one of the main reasons to consider OpenShift as an enterprise container platform for their systems. I sympathize with all of you and hope this year we’re all going to move Istio from test systems to production. Fingers crossed!

CDK/Minishift options missing makes testing harder

I know it’s going to be fixed soon, but at the moment the only way of testing OpenShift 4 is either to use it as a service (OpenShift Online, Azure Red Hat OpenShift) or to install it, which takes roughly 30 minutes. For version 3 we have the Container Development Kit (or its open source equivalent for OKD - minishift), which launches a single node VM with OpenShift and does it in a few minutes. It’s perfect for testing, also as a part of a CI/CD pipeline.

Certainly, it’s not the most coveted feature but since many crucial parts have changed since version 3 it would be good to have a more convenient way of getting to know it.

UPDATED on 30.8.2019 - there is a working solution for single node OpenShift cluster. It is provided by a new project called CodeReady Containers and it works pretty well.

Very bad and disappointing

Now this is a short “list” but I just have to mention it since it’s been a very frustrating feature of OpenShift 3 that just happened to be a part of version 4 too.

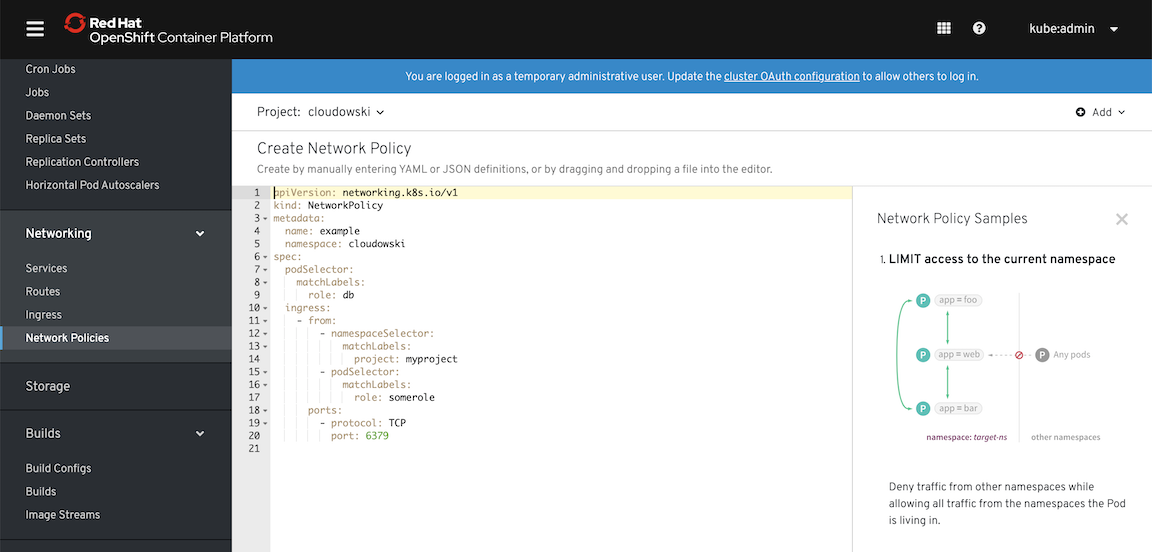

Single SDN option without support for egress policy

I still can’t believe how the networking part has been neglected. Let me start with a simple choice, or rather the lack of it. In version 3 we could choose Calico as an SDN provider alongside the OpenShift “native” SDN based on Open vSwitch (an overlay network spanned over software VXLAN). Now we have only this single native implementation, but I guess we could live with it if it was improved. However, it’s not. In fact, when deploying your awesome apps on your freshly installed cluster you may want to secure your traffic with NetworkPolicy acting as a Kubernetes network firewall. You even have a nice guide for creating ingress rules and sure, they work as they should. But if you want to limit egress traffic, you can’t leverage the egress part of NetworkPolicy, as for some reason OpenShift still uses its dedicated “EgressNetworkPolicy” API, which has the following drawbacks:

- You should create a single object for an entire namespace with all the rules - although many can be created, only one is actually used (in a non-deterministic way, you’ve been warned) - no internal merge is done, unlike with standard Kubernetes NetworkPolicy objects

- You can limit traffic only based on IP CIDR ranges or DNS names but without specifying ports (sic!) - that’s right, it’s like an ’80s firewall appliance operating on L3 only…
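
To show the difference, here is a sketch of both objects side by side. The namespace, the object names and the addresses are made up for the example. The first is OpenShift’s EgressNetworkPolicy, the second is what the same intent could look like with the egress part of a standard Kubernetes NetworkPolicy, which does let you specify ports.

```yaml
# OpenShift-specific API: one object per namespace, rules matched in order,
# destinations by CIDR or DNS name only - there is no field for ports
apiVersion: network.openshift.io/v1
kind: EgressNetworkPolicy
metadata:
  name: default
  namespace: demo                # hypothetical namespace
spec:
  egress:
  - type: Allow
    to:
      cidrSelector: 10.0.0.0/16
  - type: Allow
    to:
      dnsName: www.example.com
  - type: Deny
    to:
      cidrSelector: 0.0.0.0/0
---
# Standard Kubernetes API: egress rules can be limited to protocol and port
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-egress-postgres    # hypothetical name
  namespace: demo
spec:
  podSelector: {}                # applies to all pods in the namespace
  policyTypes:
  - Egress
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/16
    ports:
    - protocol: TCP
      port: 5432
```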

I said it - for me, it’s the worst part of OpenShift that makes the management of network traffic harder. I hope it will be fixed pretty soon and for now Istio could potentially fix it on an upper layer. Oh wait, it’s not supported yet..

Summary

Was it worth waiting for OpenShift 4? Yes, I think it was. It has some flaws that are going to be fixed soon, and it’s still the best platform for Kubernetes workloads that comes with support. I consider this version an important milestone for Red Hat and its customers looking for a solution to build a highly automated platform - especially when they want to do it on their own hardware, with full control and freedom of choice. Now, with the operator pattern so closely integrated and promoted, it starts to look like a really good alternative to the public cloud, something that was promised by OpenStack and that looks like it’s going to be delivered by Kubernetes with OpenShift.
